The technologies involving compression have been looking for a home on z/OS for many years. There have been numerous implementations to perform compression, all with the desired goal of reducing the number of bits needed to store or transmit data. Host-based implementations ultimately trade MIPS for MB. Outboard hardware implementations avoid this issue.

Examples of Compression Implementations

The first commercial product I remember was from Informatics, named Shrink, sold in the late 1970s and early 1980s.

It used host cycles to perform compression, could generally get about a 2:1 reduction in file size and, in the case of the IMS product, worked through exits so programs didn’t require modification. Sharing data compressed in this manner required the receiver to expand it with the same software that had compressed it.

Hierarchical Storage Manager

At about the same time IBM was providing the program interface for the Mass Storage System (MSS a.k.a. 3851) in a product called the Hierarchical Storage Manager (HSM). This product implemented a storage hierarchy for active/inactive data, and as it moved data for inactive storage, it implemented compression via a Huffman frequency Encoding Algorithm.

This algorithm was optimized for writing data so it was an excellent choice for archive and backup data. Only HSM wrote and read these files, so the implementation of this compression did not suffer any data sharing or compatibility issues.

Improved Data Recording Capability

In 1986 IBM added a hardware-based data compression option to 3480 Tape called Improved Data Recording Capability (IDRC). This option doubled the capacity of the cartridge (from 200MB to 400MB) and of course required the IDRC feature to be installed on any tape drive that was expected to read or write an IDRC compacted tape volume. IDRC has been a feature of all tape products since its introduction.

Hardware Assisted Data Compression

In 1994, IBM announced a specific offering on the ESA/390 platform to perform Hardware Assisted Data Compression (HADC) also called Compression Coprocessor (CMPSC). This announcement encompassed a specific set of optimized ES/9000 hardware instructions designed to perform a generic compression of data using the Lempel-Ziv-Huffman algorithm.

Implementations were provided for generic SAM and VSAM access methods as well as DB2. The best candidates were data sets with high read/write ratios, as it was less expensive to expand the data than it was to compress it and create the dictionary.

RAMAC Virtual Array

Around 1996 the STK Iceberg, and later the RAMAC Virtual Array, provided a disk product featuring a Log Structured Array with external LZ1 compression. The z/OS host didn’t know the data was compressed. This device added the complexity of monitoring the Net Capacity Load (NCL) value to ensure free space existed in the DSS for new data to be added.

All data was compressed, so it was an impressive demonstration of how to haul 2 tons of manure in a 1-ton truck.

What’s new in Compression? zEDC

The newest implementation of compression on z/OS is the zEDC card. This implementation combines a variety of aspects from the previous compression implementations. zEDC is a card installed as a service processor in the System z PCIe slots. Like the IDRC and RVA compression implementations, it does not use CP or zIIP host cycles to perform compression.

Unlike IDRC or RVA compression, zEDC compresses data prior to and expands after the transmission of the data on the FICON Channel. Specific information about a compressed data set is captured in the SMF 14 record type.

As a shared resource across the LPARs in a CEC, zEDC monitoring data is available in the RMF 74.9 records, but the measure of true card utilization is the aggregated use of compression by card across LPARs by interval. Sharing data across CECs involves either software emulation (i.e., host cycles) or additional zEDC features on the other CECs. Selecting data for compression remains software driven, through DFSMS data class assignment (as with CMPSC).

As far as I can tell, the generic implementation of the compression algorithm does not bias an implementation toward either read or archive activity, but the low-hanging fruit is data that already uses DFSMS compression (and subsequently any use of HSM Huffman compression when this data migrates or is backed up).

zEDC meets the desired goal of reducing the number of bits needed to store and transmit data for BSAM and QSAM data. There are additional impacts elsewhere. If we are replacing one compression technology with another, then depending on the technology previously used, there may or may not be a reduction in channel utilization and batch elapsed time (due to fewer IOs) to be quantified.

Customers looking to implement zEDC should review IBM Informational APAR II14740.

Reviewing zEDC activity with IntelliMagic Vision

IntelliMagic Vision for z/OS is a wonderful tool to review zEDC activity. After zEDC is implemented, two reports are likely to be of great interest: PCIe Card Utilization and Compression Ratios.

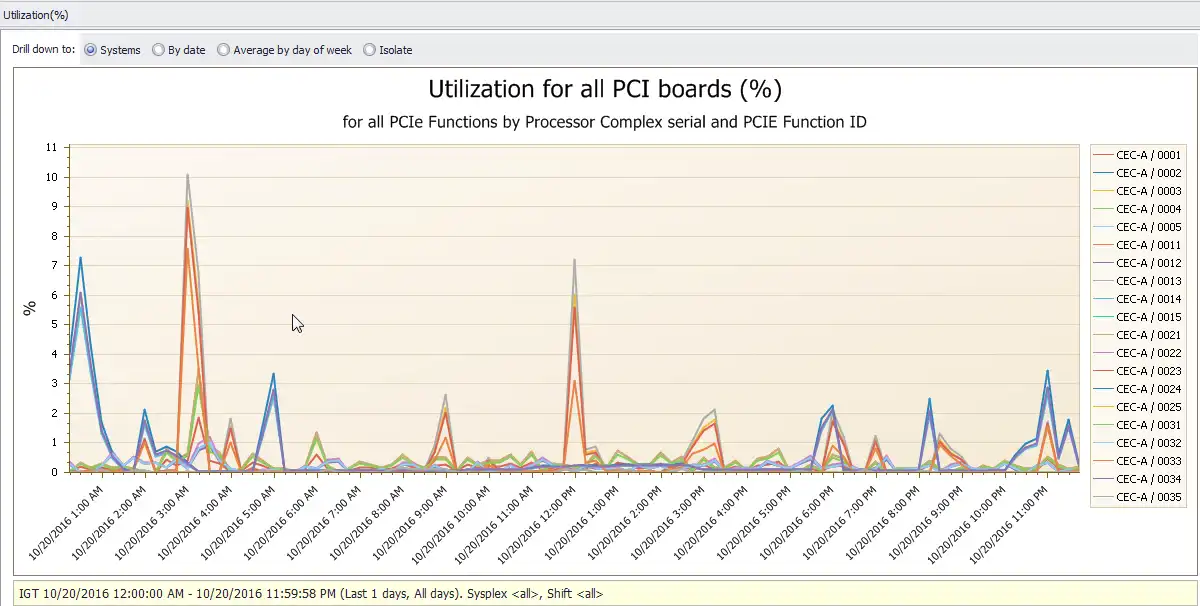

PCI Board Utilization

Figure 1: Chart showing Utilization of PCI Board by LPAR. LPARs in the CEC can share access to the PCI Card. The relationship of the PCI boards to the CECs and LPARs is described in the legend.

Let me explain the legend on this chart. The first part is the CEC in which the compression card is installed, followed by four digits. The first three of the four digits are the card number, and the last digit is the LPAR number.

From the legend, I see that on CEC-A there are four zEDC cards installed and five LPARs utilizing each card. Since no LPAR shows more than 10% utilization on this chart, no card can exceed 50% utilization in aggregate (10% × 5 LPARs). IntelliMagic Vision Development is working on improving this reporting so that card utilization is reported directly.
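The aggregation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tuple layout, the function names, and the sample values are hypothetical and do not reflect the RMF 74.9 record format or any IntelliMagic Vision interface.

```python
from collections import defaultdict


def card_utilization(samples):
    """Sum per-LPAR utilization (%) into per-card utilization per interval.

    `samples` is an iterable of (interval, card_id, lpar_id, util_pct)
    tuples. This layout is hypothetical, not the RMF 74.9 record format.
    """
    totals = defaultdict(float)
    for interval, card_id, _lpar_id, util_pct in samples:
        totals[(interval, card_id)] += util_pct
    return dict(totals)


def upper_bound(max_lpar_util_pct, lpars_per_card):
    """Worst-case card utilization if every LPAR peaked in the same interval."""
    return max_lpar_util_pct * lpars_per_card


# Made-up values: one card shared by five LPARs during one interval.
samples = [
    ("09:00", "001", 1, 4.0),
    ("09:00", "001", 2, 7.5),
    ("09:00", "001", 3, 2.0),
    ("09:00", "001", 4, 9.0),
    ("09:00", "001", 5, 1.5),
]

per_card = card_utilization(samples)
print(per_card[("09:00", "001")])  # aggregated utilization of card 001
print(upper_bound(10.0, 5))        # the 10% x 5 LPARs bound from the chart
```

The bound is deliberately pessimistic: it assumes every LPAR hits its peak in the same interval, whereas the per-interval sum shows what the card actually carried.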

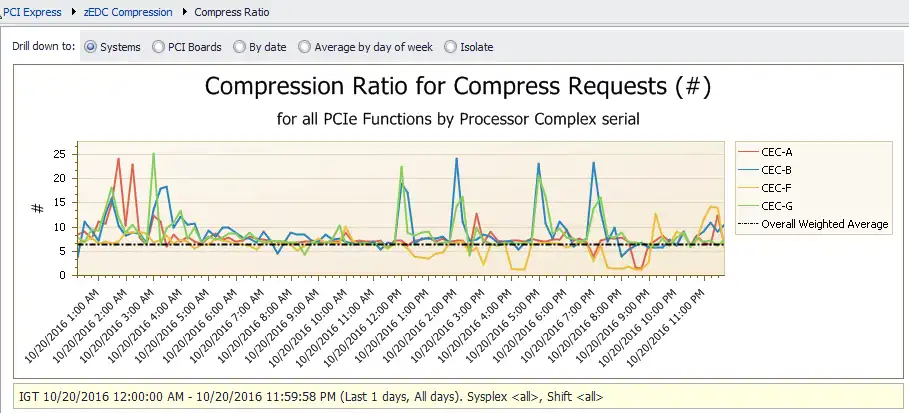

Compression Ratios

Here’s an example of an IntelliMagic Vision report of Compression Ratios:

Figure 2: Compression Ratios: this expresses the relationship of the size of the uncompressed data and the same data compressed (with the compressed data expressed as 1). The data to calculate the compression ratios is provided by RMF 74.9 records in 15-minute intervals. The variation in compression ratios relates to how effective the compression technique is on the data that’s presented.

In this example, we can see that compression ratios get as high as 25:1 but average around 6:1 or 7:1. These results are impressive when compared with, for example, IDRC. This is measuring compression ratios by request per interval, so within a data set some sections of the data could be compressing at the maximum while other parts compress at less than that.
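To make the ratio concrete, here is a minimal Python sketch of how a compression ratio could be derived from byte counts per interval. The function names and the sample byte counts are assumptions made for illustration; they are not RMF 74.9 field names or measured values. The sketch also shows why a single 25:1 interval does not pull the overall ratio far from 6:1 or 7:1 when that interval carries little data.

```python
def compression_ratio(uncompressed_bytes, compressed_bytes):
    """Return N for an N:1 ratio (compressed size expressed as 1)."""
    if compressed_bytes <= 0:
        raise ValueError("compressed_bytes must be positive")
    return uncompressed_bytes / compressed_bytes


def overall_ratio(intervals):
    """Ratio over all intervals, weighted by the volume of data in each."""
    total_uncompressed = sum(u for u, _ in intervals)
    total_compressed = sum(c for _, c in intervals)
    return compression_ratio(total_uncompressed, total_compressed)


# Made-up (uncompressed, compressed) byte counts for three intervals.
intervals = [(6000, 1000), (2500, 100), (7000, 1000)]

ratios = [compression_ratio(u, c) for u, c in intervals]
mean_of_ratios = sum(ratios) / len(ratios)
overall = overall_ratio(intervals)

for r in ratios:
    print(f"{r:.1f}:1")
print(f"mean of ratios {mean_of_ratios:.2f}:1, overall {overall:.2f}:1")
```

The simple mean of the interval ratios is dominated by the small 25:1 interval, while the volume-weighted figure stays close to the ratios of the intervals that moved most of the data.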

Ancillary Impacts of Compression

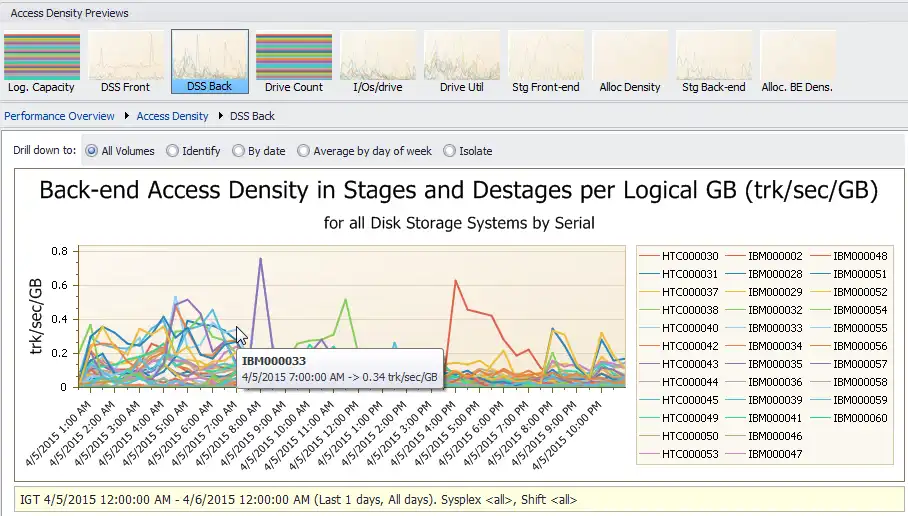

If a given implementation is not limited to selective data sets and we are now compressing data that had not previously been compressed, then expect additional impacts. For example, as was the case with the Iceberg/RVA, expect Cache Hit Ratios to improve: because we are now getting hits against compacted data, the cache looks bigger. Another impact could be an increase in Access Density (IOs/GB). As Access Density increases, the use of hierarchical storage tiers and possible upgrades to faster back-end storage may be desirable to maintain expected data response times.

Here’s an example of Access Density reports available in IntelliMagic Vision. The higher the access density, the better the data would be served by Solid State or Flash storage.

Figure 3: Back-end Access Density is a measure of the data read and written to the disk over a specific capacity (in this case Tracks/Second transferred per Gigabyte). It’s a measure of back-end activity.
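As a rough illustration of why compression can raise Access Density, the Python sketch below divides an activity rate by the capacity the data occupies. The function name and all numbers are hypothetical, and the sketch assumes the activity rate stays the same after compression, which may not hold if compression also reduces the number of IOs.

```python
def access_density(ios_per_second, capacity_gb):
    """Access density expressed as IOs per second per gigabyte occupied."""
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    return ios_per_second / capacity_gb


# Made-up workload: same activity, but the data occupies a quarter of the
# space after compression (an assumed 4:1 ratio).
before = access_density(2000.0, 1000.0)
after = access_density(2000.0, 250.0)

print(f"before: {before:.1f} IOs/sec/GB")
print(f"after:  {after:.1f} IOs/sec/GB")
```

Under these assumptions the density rises by the same factor as the compression ratio, which is the effect that makes faster back-end storage more attractive.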

Conclusion: What Good is a zEDC Card?

As compression helps you meet your goals for reducing the number of bits needed to store or transmit data, the importance of a feedback loop in any systems implementation cannot be overstated. Feedback is critical to improving performance. IntelliMagic Vision allows you to assess the benefit of zEDC: how utilized the zEDC card is, whether you are selecting the correct items for compression, and whether the action of compression is driving disk utilization higher.

This article's author

Dave Heggen
