Introduction

Please watch this short video first to understand the data collection process: Data Collection Video.

This webpage serves as an overview of the recommended procedures for gaining the most out of your IntelliMagic Vision Performance Assessment. IntelliMagic will perform this analysis based on data created by IBM’s Resource Measurement Facility (RMF) and collected through the System Management Facility (SMF) data sets. In addition to the SMF/RMF data, the analysis will rely on background and configuration information provided to IntelliMagic, through various system commands or other data sources detailed in this document. We process your data by sysplex and by day, so all related data needs to be separated to this level of granularity (or lower). For more information about SMF data and interval lengths, please refer to Appendix K: SMF Intervals. For Near Real Time processing considerations, please refer to Appendix H: Near Real Time (NRT) considerations.

The following steps summarize the process detailed in this document:

| Note: You will receive an email from IntelliMagic with a link to the following website, from which you can download the RMFPACK program, the SMF2ZRF JAR class file and all the sample JCL. No password is required: www.intellimagic.com/support/rmfpack-download. The JCL for the Sort, RMFPACK and FTP steps is in the file named “RMFPACK.JCL.XMIT”. Please read the $$INDEX for a description of the JCL. Any questions may be directed to the support team.

Note: RMFPACK is a z/OS program utilizing zEDC for data compression. SMF2ZRF is a JAVA class which utilizes zIIPs to compress data. Either can be used for any and all file types. Jobs in the JCL library have been provided for both programs. Throughout this document, RMFPACK will refer to either RMFPACK or the SMF2ZRF JAVA class for data compression. |

FAQ

Q. How close to real time is IntelliMagic Vision for z/OS?

A. SMF/RMF data can be sent once an hour.

Q. Can I send multiple sysplexes in the same set of files?

A. No. Ensure only one sysplex is represented in each file/set of files.

Q. Can I send multiple days of data in a single file?

A. No. IntelliMagic has automation to process the data and prefers each day of data sent separately. TS7700 BVIR is the only exception.

Q. Can I send SMF data to you without sorting first?

A. No. Each file needs to be sorted into date/time order.

Q. Can I use IBM’s terse utility to pack the RMF/SMF data?

A. No. Please use IntelliMagic’s RMFPACK utility or the SMF2ZRF JAVA class.

Q. What filename should I send the data to on the IntelliMagic side?

A. Use Date.HHMM.HHMM.Company.Sysplex.Record.zrf for all RMF/SMF/BVIR/DCOLLECT/FORMCATS data. The date format is yymmdd; HHMM.HHMM is the hour and minute of the beginning and ending time of the data; Company and Sysplex should each be 8 characters or fewer; Record should represent the data within, e.g. SMF7x or SMF100. The LPAR name may be added after the Sysplex name if sending by LPAR. The extension is zrf for SMF data, zrd for DCOLLECT, zrb for IBM BVIR data and zrt for the tape management FORMCATS file.

Q. What file extension should I use for the files on the IntelliMagic side?

A. .zrf is for SMF/RMF data, .zrd is for DCOLLECT output, .zrb is for TS7700 BVIR, .zrt is for FORMCATS output of tape management files.

Q. How do I handle multiple time zones within a single Sysplex?

A. Create and send in a separate set of files for each LPAR in the Sysplex to ensure time zones are unique in each set of files.

Q. How is the SMF/RMF data’s interval determined?

A. Please refer to Appendix K: SMF Intervals.

Q. What are the best practices for interval settings?

A. Please refer to Appendix K: SMF Intervals.

Q. Can the intervals be configured differently for different LPARs (e.g. DEV/TEST/PROD)?

A. No. Every LPAR will be processed with the longest interval value.

Q. What happens to CICS transaction data and Db2 accounting data and other SMF data that is not on an interval basis?

A. Transactional and accounting data will be summarized into intervals sync’d with RMF.

Q. Can I offload data compression to zIIPs?

A. Yes, SMF2ZRF is a JAVA class which can be used to create ZRF compressed files.

Q. What are the default retention settings for SaaS data?

A. See Appendix O: Cloud Database Retention Settings.

Identify Required Data

The following tables provide guidance on the required data for each module. For a description of each module please refer to Appendix A.


SMF Record Types supported by z/OS Systems, Db2, CICS, WebSphere, TCP/IP, MQ and z/OS Connect modules

The following table provides the record types for the z/OS, Db2, CICS, WebSphere, TCP/IP, MQ, and z/OS Connect modules. If these records are being generated, customers are strongly encouraged to provide them to benefit from the visibility provided by IntelliMagic Vision into these types of data.

| Record Type | Record Subtype | Required | Comments |

| 30 | (all) | | Address Space. Ensure interval records are created by using INTVAL(nn) in the SMFPRMxx member, which creates SMF interval records. |

| 42 | 5,6 | | DFSMS Statistics provide 1) comprehensive dataset statistics with the Disk module, 2) summary dataset views with the Systems module and 3) enriched views (combined with IFCID 199) with the Db2 module. |

| 70-78 | (all) | Yes | Customers are strongly encouraged to provide all RMF 70-78 records that are being generated for all sysplexes and systems to be included in the study. RMF defaults for type 77, 74.7 and 74.8 are NOENQ, NOFCD and NOESS. Update RMF to collect these subtypes to allow for IntelliMagic Vision reporting. |

| 88 | 1,11 | | System Logger |

| 89 | (all) | | SCRT Usage |

| 99 | 14 | | LPAR Topology |

| 100,101 | | | Db2 Statistics (100) and Accounting (101) |

| 102 | | | Db2 IFCID 199: buffer pool statistics at data set level |

| 110 | 1,2 | | CICS monitoring (1) and statistics (2). Please see Appendix J: Gathering the CICS Data Dictionary for information on gathering the data dictionary. |

| 113 | 1 | | SMF type 113.1 (Processor Cache statistics). This is strongly recommended if you are on z/OS version 2.1 or higher. For z15, CPU MF Crypto Counters should be enabled via the following command. This example collects Basic, Extended and Crypto counters. Modify HIS: F HIS,B,TT='Text',CTRONLY,CTR=(B,E,C),SI=SYNC,CNTFILE=NO |

| 115 | 1,2,215 | | MQ Subsystem Statistics produced with statistics trace class(1). Subtype 1: logs and storage; subtype 2: messages, buffer and paging; subtype 215: bufferpool. |

| 116 | 0,1,2 | | MQ Subsystem accounting produced with trace class(1,3). Subtype 0: message manager; subtype 1: task+thread+queue level; subtype 2: queue level. Please see Appendix K: SMF Intervals for information on setting MQ Intervals. |

| 119 | 2,4,5,6,7,12 | | TCP/IP. Subtype 2: connection; subtype 4: profile; subtypes 5,6,7: statistics; subtype 12: zERT. Please see Appendix M: Configuring TCP/IP for SMF 119.12 zERT Records for information on enabling collection of zERT data. |

| 120 | 9 | | WebSphere Application Server performance statistics |

| 123 | 1,2 | | z/OS Connect. Subtype 1 records contain information about each API provider request, including information about which system of record (SOR) the request was sent to. Subtype 2 records have detailed information about each API requester request, including information about what HTTP endpoint the request was sent to. To configure SMF 123 record collection, see Configuring the Audit Interceptor to enable version 2 formatted records. |

Note: RMF defaults specify NOENQ (77), NOFCD (74.7) and NOESS (74.8), which cause RMF to exclude these records from SMF recording. See the RMF Enqueue Activity report and Appendix L: Gathering Cache and ESS Statistics.

Separate from SMF data, 3 months of SCRT CSV reports are required for an MLC Assessment.

CPU MF Crypto Counters should be enabled via the following command. This example collects Basic, Extended and Crypto counters. Modify HIS: F HIS,B,TT='Text',CTRONLY,CTR=(B,E,C),SI=SYNC,CNTFILE=NO


SMF Record Types supported by z/OS Disk and Replication modules

The following table displays the record types for the z/OS Disk and Replication modules. If these records are being generated, customers are strongly encouraged to provide them to benefit from the visibility provided by IntelliMagic Vision into these types of data.

| Record Type | Record Subtype | Required | Comments |

| 70-73,75,78 | (all) | Yes | 70: Processor Activity, 71: Real Storage and Paging, 72: Workload Activity, 73: Channel Path Activity, 75: Page Data Set Activity, 78: I/O Queuing and HyperPAV Activity |

| 74 | 1,2,9,10 | Yes | 74.1: Device Activity, 74.2: XCF Activity, 74.9: PCI and zEDC statistics, 74.10: Storage Class Memory statistics. NOTE: BMC/CMF users must install BQM1600 on all LPARs to fix a known issue with IOSQ response time reporting. |

| 74 | 4 | Yes | SMF/RMF type 74.4 (Coupling Facility statistics) records are gathered by default by the RMF Monitor III (RMFGAT) address space. There is no equivalent option in the post-processor ERBRMFxx member. |

| 74 | 5 | Yes | SMF/RMF type 74.5 (CACHE) records are global measurements, meaning it is only necessary to collect them from a single LPAR that shares the disk subsystem(s). For SMF/RMF type 74.5 (CACHE) records, all disk volumes need to be online to the collection LPAR. Please see Appendix L: Gathering Cache and ESS Statistics for information on gathering Cache statistics. |

| 74 | 7 | Yes | SMF/RMF type 74.7 (FCD statistics) records require the CUP feature enabled on your FICON Directors and that the FICON STATS=YES option be enabled in IECIOSxx (default is on). Please see Appendix L: Gathering Cache and ESS Statistics for information on gathering FICON statistics. |

| 74 | 8 | Yes | SMF/RMF type 74.8 (ESS) records are global measurements, meaning it is only necessary to collect them from a single LPAR that shares the disk subsystem(s). At least one volume in each DSS needs to be online to the collection LPAR. To be safe, ensure that you collect records from at least one LPAR in each sysplex. Please see Appendix L: Gathering Cache and ESS Statistics for information on gathering ESS Array and Link statistics. If replication is present, the port type of SYNC or ASYNC must be supplied by the customer for each DSS, along with the distance between sites (miles/km or local). |

| 42 | 5,6 | Yes | In order to enable SMF 42 collection, it is necessary to collect the SMF 42 records from all LPARs (enabled in SMFPRMxx). |

| 30 | (all) | | Address Space |

| 42 | 11 | | SDM/XRC, zGM: In order to enable SMF 42 collection, it is necessary to collect the SMF 42 records from all LPARs (enabled in SMFPRMxx). In order to create the SDM reports we also need the GEOXPARM file; see Appendix D: GEOXPARM. |

| 105 | N/A | | GDPS Global Mirror |

| 124 | 1 | | FICON Link information is written for each FICON channel, switch entry port, switch exit port, and control unit port that is accessible to z/OS. It contains information that can be used to diagnose I/O errors and performance issues that might be caused by fiber optic infrastructure issues or incorrect I/O configurations. One record is created every 24 hours. FICON two-byte link addresses are required. Please see Upgrading to two-byte link addresses. |

| 191 | (all) | | Or equivalent record for HDSV Mainframe Analytics Recorder (MAR). |

| 206 | (all) | | Or equivalent record for EMC SRDF reports. |

SMF Record Types supported for z/OS Virtual Tape module

The following table displays the record types for the z/OS Tape module. If these records are being generated, customers are strongly encouraged to provide them to benefit from the visibility provided by IntelliMagic Vision into these types of data.

| Record Type | Record Subtype | Required | Special Notes |

| 14,15,21,30, 74.1 | (all) | Yes | Provides logical view of the Virtual Tape environment. RMF defaults type 74.1 to DEVICE(NOTAPE) and IOQ(NOTAPE). Update RMF to collect these subtypes to allow for IntelliMagic Vision tape reporting. |

| BVIR | N/A | | This file should contain the BVIR history for the IBM TS7700 devices. Please see Appendix B: Gathering BVIR data – IBM TS7700 for information on gathering the BVIR data. |

| Specified in HSC | N/A | | For Oracle VSM environments include the Oracle VSM hardware records as specified in the HSC. |

| N/A | N/A | | Tape Management Catalog: See Appendix E: Gathering Additional Tape Management Configuration Data |

| N/A | N/A | | MVC VSM data (the optional .XML data): See Appendix E: Gathering Additional Tape Management Configuration Data |

Extract

Our recommendation is to collect one or two weeks of data to ensure we have a good representation of the workload. Data should be collected during a peak period, preferably during a month-end or other peak business cycle.

To ensure data integrity, we recommend that IBM’s standard SMF extract program be used (IFASMFDP).

We provide sample JCL members for the extraction in the RMFPACK.JCL.XMIT. See the following member:

- A1SMFDP provides the JCL for dumping the records for Systems, Db2, CICS, TCP/IP, WebSphere, MQ, Disk and Tape modules

If you wish to dump an SMF log stream issue the SWITCH SMF command, which dumps the SMF data in the buffers out to the log streams. You can then use the SMF log stream dump program, IFASMFDL, to dump the specified log stream data to a data set. Use this sample JCL member which first creates START and END control cards and then executes IFASMFDL to dump the specified log stream. If you have multiple log streams, either specify multiple LSNAME control statements or execute the DUMP step for each log stream.

- A1SMFDL provides the JCL for dumping the records from the specified log stream(s)

| Note: If you are sending multiple days of data, each day should be extracted separately. If you have multiple sysplexes in your data, they must be extracted into separate files. Multiple LPARs per file is fine if there is only one time zone. If a sysplex contains LPARs with different time zones, then each LPAR must be extracted separately. For example, if you have a sysplex named PRODPLEX and another called TESTPLEX with 2 time zones, then PRODPLEX needs to be extracted separately and each LPAR in the TESTPLEX sysplex needs to be extracted in a separate file for each day of data sent. This procedure works for all the SMF/RMF data. |
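The separation rules in the note above can be sketched as a small planning helper. This is only an illustration and not part of the supplied JCL: the function name `plan_extract_groups`, the tuple layout and the LPAR names are all hypothetical. It returns one entry per extract file: one per sysplex per day when the sysplex has a single time zone, and one per LPAR per day when it does not.

```python
from collections import defaultdict


def plan_extract_groups(lpars, days):
    """Return one (day, sysplex, lpar_or_None) tuple per extract file.

    lpars: iterable of (sysplex, lpar, time_zone) tuples (hypothetical layout).
    days:  iterable of day labels such as "200309".
    """
    zones = defaultdict(set)     # sysplex -> time zones seen
    members = defaultdict(list)  # sysplex -> LPAR names
    for sysplex, lpar, time_zone in lpars:
        zones[sysplex].add(time_zone)
        members[sysplex].append(lpar)

    groups = []
    for day in days:
        for sysplex in sorted(members):
            if len(zones[sysplex]) == 1:
                # Single time zone: all LPARs of the sysplex share one file.
                groups.append((day, sysplex, None))
            else:
                # Mixed time zones: each LPAR gets its own file.
                for lpar in sorted(members[sysplex]):
                    groups.append((day, sysplex, lpar))
    return groups
```

For the PRODPLEX/TESTPLEX example, a single day yields one PRODPLEX file plus one file for each TESTPLEX LPAR.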

Sort

Following extraction, the data must be sorted into date/time stamp order (just as with a standard SMF/RMF report). Our sort process will split the raw data into SMF/RMF (SMF7x), SMF type 30, 42, 100, 101, 102, 110, 115, 116, 119, 120 and tape files. If you have additional optional SMF data (e.g. 105, 124, 206), we recommend you direct that to the SMFOTH file. All data from a single Sysplex should be sorted into a single set of files. If there are multiple Sysplexes, each should have its own set of files.

We provide sample JCL members for the sort in the RMFPACK.JCL.XMIT. See the following member:

- A2SORT provides the JCL for sorting the records for Systems, Db2, CICS, TCP/IP, WebSphere, MQ, Disk and Tape modules

| Note: Multiple days of data should be split into separate files. If you have multiple time zones in your data and wish them to be merged into a single project, they must be sorted into separate files. Multiple LPARs per file is fine, but only one time zone per file (7x, 30, 42, etc.). For example, if you have a sysplex named PRODPLEX and it contains multiple time zones, then you need to create separate files for each LPAR for the 7x records, and separate files for each LPAR for the 42 records. Use a single A2SORT job, found in the JCL, to sort the data for a sysplex with a single time zone, or for each LPAR if the sysplex contains multiple time zones. Run one A2SORT job per group of systems. This procedure works for all the SMF/RMF data. |

RMFPACK

Install RMFPACK on your z/OS System

Download and extract rmfpack to create three XMIT files. You need to transfer the transmitted binary RMFPACK.LOAD.XMIT (the load library containing RMFPACK), RMFPACK.SMF2ZRF.XMIT (the JAVA class file) and RMFPACK.JCL.XMIT (the JCL) to the mainframe using a binary file transfer (FTP or IND$FILE). The files should be allocated with RECFM=F and LRECL=80 on the mainframe, and with sufficient space (CYLINDER allocation units, 10 cylinders primary space). Be sure to specify the bin80 transfer type in each case. For Host File, specify ‘userid.RMFPACK.LOAD.XMIT’, ‘userid.RMFPACK.SMF2ZRF.XMIT’ and ‘userid.RMFPACK.JCL.XMIT’ respectively (including the quotes), where you replace userid with your actual userid.

You will now have three files on the mainframe in what is called ‘transmit format’. In order to ‘unpack’ these files, you will ‘receive’ them. This is done with the receive command on ISPF panel 6 as RECEIVE INDA(RMFPACK.JCL.XMIT). The receive command will create a library RMFPACK.JCL under your userid.

| Note that the word ‘receive’ here does not refer to a transmission from one system to another, but to a file conversion from the xmit ‘transmitted format’ to a regular mainframe file. Repeat this for the RMFPACK program with RECEIVE INDA(RMFPACK.LOAD.XMIT), and for the SMF2ZRF JAVA class with RECEIVE INDA(RMFPACK.SMF2ZRF.XMIT), which will create a sequential file. Additionally, customize SMF2ZRF and run the SMF2ZRF job in the JCL library to copy the SMF2ZRF jar file to an OMVS HFS directory. Note the location in HFS, as it will be needed in the member ‘STDENV’ (a shell script which configures environment variables for the Java JVM). |

Run RMFPACK

You will need to compress the data using the IntelliMagic-provided RMFPACK program or the SMF2ZRF JAVA class. All of the SMF/RMF/DCOLLECT/BVIR/TMC data must be compressed before it is transmitted with TCP/IP; otherwise the transfer will corrupt the uncompressed data.

We provide sample JCL members for the RMFPACK and SMF2ZRF JAVA class in the RMFPACK.JCL.XMIT. See the following members:

- A3PACK provides the JCL for running RMFPACK against the records for Systems, Db2, CICS, TCP/IP, WebSphere, MQ, Disk and Tape modules

- A3PACKZ provides the JCL for running SMF2ZRF JAVA class against the records for Systems, Db2, CICS, TCP/IP, WebSphere, MQ, Disk and Tape modules

- Ensure SMF2ZRF has been run to copy the SMF2ZRF JAVA class file into the OMVS HFS to a location of your choosing

- A3PACKZ calls the procedure JVMPRC86, which executes the JAVA launcher. Find the JAVA launcher on your system and change A3PACKZ to invoke it.

- Update the STDENV member as described in the member itself, including JAVA_HOME and APP_HOME CLASSPATH variables.

Following this step, depending on what you collected you may have yourname.SMF7x.ZRF, yourname.SMF30.ZRF, yourname.SMF42.ZRF, yourname.SMFOTH.ZRF, yourname.SMFTAPE.ZRF, yourname.SMF115.ZRF, yourname.SMF116.ZRF, yourname.SMF119.ZRF, yourname.SMF120.ZRF, yourname.SMF100.ZRF, yourname.SMF101.ZRF, yourname.SMF102.ZRF, yourname.SMF1101.ZRF, yourname.SMF1102.ZRF.

If you have multiple sysplexes and time zones, you should ensure you retain this separation through to the ZRF files.

FTP

At this point in the process you have extracted, sorted and used the RMFPACK utility to create several compressed files.

Now you need to use FTP to transfer these files to IntelliMagic using the FTP credentials you were provided in the IntelliMagic data collection meeting.

In order to provide the highest possible security when transferring data to IntelliMagic, we recommend the usage of a secure file transfer mechanism (FTPS or SFTP).

In order to ease the processing of the data it is important to make sure you have unique filenames. When transferring data to IntelliMagic please use filenames in the format of:

Date.HHMM.Company.Sysplex.LPAR.SMFRecord.ZRF

(where: Date = YYMMDD; HHMM = 2300 if not NRT or ENDing hour if NRT; Company (8 chars or less); Sysplex (8 chars or less); LPAR = optional LPAR name if sending by LPAR; Record (7X, 30, 42, tape, 115, 116, 119, 120, 100, 101, 102, 1101, 1102, or OTHer))

Where the symbolics (#DATE etc) are replaced by current values. A few examples of properly formatted file names are in the table below:

| Customer, Date, System and Records | File Name |

| World Wide Bank, March 9th 2020, DEVPLEX, 42 records | 200309.2300.WWB.DEVPLEX.SMF42.ZRF |

| Computer Manufacturer, May 1st 2019, DB2PLEX, 7X records | 190501.2300.COMPMAN.DB2PLEX.SMF7X.ZRF |

| Big Insurance, January 2nd 2020, PRODPLEX Eastern Standard Time, EAST LPAR, 7X records | 200102.2300.BIGINS.PRODPLEX.EAST.SMF7X.ZRF |

| Big Insurance, January 2nd 2020, PRODPLEX Mountain Standard Time, DENV LPAR, 7X records | 200102.2300.BIGINS.PRODPLEX.DENV.SMF7X.ZRF |

| Big Insurance, January 2nd 2020, PRODPLEX Mountain Standard Time with no daylight savings shift, PHX LPAR, 7X records | 200102.2300.BIGINS.PRODPLEX.PHX.SMF7X.ZRF |
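If you generate file names in a script before the transfer, the naming format above can be assembled as in the following sketch. The function `build_zrf_name` and its parameters are hypothetical and not part of RMFPACK.JCL. The sketch assumes the record label is prefixed with "SMF", as in the example file names, and uppercases the whole name.

```python
import datetime


def build_zrf_name(day, company, sysplex, record, lpar=None, hhmm="2300"):
    """Build Date.HHMM.Company.Sysplex[.LPAR].SMFRecord.ZRF (illustrative helper)."""
    for label, value in (("company", company), ("sysplex", sysplex)):
        if not 1 <= len(value) <= 8:
            raise ValueError(f"{label} must be 1 to 8 characters: {value!r}")
    if len(hhmm) != 4 or not hhmm.isdigit():
        raise ValueError(f"hhmm must be four digits: {hhmm!r}")

    # Date is YYMMDD; HHMM defaults to 2300 for non-NRT data.
    parts = [day.strftime("%y%m%d"), hhmm, company, sysplex]
    if lpar:
        parts.append(lpar)  # optional, only when sending by LPAR
    parts.append("SMF" + record)  # assumption: "SMF" prefix as in the examples
    parts.append("ZRF")
    return ".".join(parts).upper()


print(build_zrf_name(datetime.date(2020, 3, 9), "WWB", "DEVPLEX", "42"))
# prints 200309.2300.WWB.DEVPLEX.SMF42.ZRF
```

The printed name matches the World Wide Bank row in the table. Passing `lpar="EAST"` inserts the LPAR qualifier after the sysplex name.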

We provide sample JCL members for the FTP in the RMFPACK.JCL.XMIT. See the following members:

- A4FTP invokes a REXX EXEC to issue the FTP commands for sending the records for Systems, Db2, CICS, TCP/IP, WebSphere, MQ, Disk and Tape modules. This REXX EXEC will retry the FTP if it fails for any reason. It will also use FTPS if you uncomment the SYSFTPD and STDENV DD statements.

- A4FTPFTP provides the JCL for FTPing the records for Systems, Db2, CICS, TCP/IP, WebSphere, MQ, Disk and Tape modules. Use this FTP job if first sending to a local FTP server.

- A4FTPS uses FTPS to transfer files. It is similar to A4FTP but is a secure method of transferring data.

- A4SFTP uses SFTP to transfer files.

Transferring the data to IntelliMagic requires the ability to send the data from your z/OS environment to our public FTPS/SFTP server. There are two mechanisms for transferring the data directly from your z/OS environment to our FTPS/SFTP server. The preferred method is the secure method. This will be referred to as the z/OS Secure Option and uses strong encryption facilitated by Public key certificate installation on z/OS. Details on how to configure this are available in Appendix F: z/OS Secure Option.

If security requirements within your environment preclude you from directly using FTP from your z/OS environment to our server, then you will need to investigate the Proxy FTP option below or contact your network security team to identify the appropriate way to transfer large files from your environment to our FTP/SFTP server. For an example of the Proxy approach please see Appendix G: Proxy FTP.

| Note: To provide the highest possible security when transferring data to IntelliMagic, we recommend using a secure file transfer mechanism (FTPS or SFTP). The userid and password for the IntelliMagic FTP server are provided in a customer account package, available from your account manager or technical focal. The IntelliMagic FTP server allows both secure and non-secure FTP connections. If you have any questions about the process, you can contact support. |

Firewall Considerations

It may be necessary to open a port or a range of ports to transfer data to IntelliMagic’s FTP Server(s). This also depends on the method of transfer. If you are unable to initiate a successful transfer, verify that the following IP addresses and ports are whitelisted for the method of transfer you are using:

| IP Addresses |

| 35.193.242.36 |

| 35.188.91.40 |

| 35.224.88.157 |

| Port Range | Protocol |

| 21 | FTP or FTP(S) |

| 22 | SFTP |

| 28000-28500 | FTP passive range* |

| Note: The FTP passive range is required for all FTP transfers. |
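Before involving the network team, it can help to confirm whether a control port is reachable at all. The following is a minimal sketch, assuming a machine with Python available on the same network path as the transfer host; the helper name `port_reachable` is hypothetical. It only tests that a TCP connection can be opened, so it cannot confirm that the passive range is open, because those ports accept connections only during an active FTP session.

```python
import socket


def port_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Example: check the FTP/FTPS and SFTP ports on one of the listed addresses.
# for port in (21, 22):
#     print(port, port_reachable("35.193.242.36", port))
```

A `False` result points to a firewall or routing problem rather than a credential or certificate problem.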

-

On Windows / MacOS / Linux

Step 1: Extract the root certificate by uncompressing the zip files that were supplied by IntelliMagic.

The following file is available after extraction:

- GoDaddy_bundle-g2-g1.crt (root certificate)

Step 2: Transfer the certificate to z/OS as ASCII files into z/OS data sets with the following DCB attributes:

- Record format: VB

- Logical record length: 256

- Block size: 27998

Step 3: FTP the certificates to z/OS.

You can use the following sample FTP input to transfer the certificate.

ASCII

locsite cyl pri=1 sec=1

locsite recfm=VB lrecl=256 blksize=27998

get GoDaddy_bundle-g2-g1.crt 'SHARE21.IM.CERT.GODADDY'

Note: You should transfer the certificate as ASCII.

On z/OS

Step 4: You will need to have sufficient RACF (or other SAF system) authority to add digital certificates to your installation.

The following steps have sample JCL based on the certificate names (i.e. Data Sets) used in the previous steps.

Allow certificate processing for user <USER-ID>. Substitute your TSO User-ID in this sample.

//*

//* Allow certificate processing for user

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

PERMIT IRR.DIGTCERT.ADD CLASS(FACILITY) ID(<USER-ID>) ACCESS(READ)

PERMIT IRR.DIGTCERT.ADDRING CLASS(FACILITY) ID(<USER-ID>) ACCESS(READ)

PERMIT IRR.DIGTCERT.ALTER CLASS(FACILITY) ID(<USER-ID>) ACCESS(READ)

PERMIT IRR.DIGTCERT.CONNECT CLASS(FACILITY) ID(<USER-ID>) ACCESS(UPDATE)

PERMIT IRR.DIGTCERT.LIST CLASS(FACILITY) ID(<USER-ID>) ACCESS(READ)

PERMIT IRR.DIGTCERT.LISTRING CLASS(FACILITY) ID(<USER-ID>) ACCESS(READ)

SETROPTS REFRESH RACLIST(FACILITY) GENERIC(FACILITY)

/*

//

Note: Verify with your SAF (e.g. RACF) administrator whether the FACILITY class is being RACLISTed.

Step 5: You can use the following sample JCL if the IRR.DIGTCERT profiles are not yet defined in the FACILITY class on your system.

//*

//* Define IRR FACILITY class

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RDEFINE FACILITY IRR.DIGTCERT.ADD UACC(NONE)

RDEFINE FACILITY IRR.DIGTCERT.ADDRING UACC(NONE)

RDEFINE FACILITY IRR.DIGTCERT.ALTER UACC(NONE)

RDEFINE FACILITY IRR.DIGTCERT.CONNECT UACC(NONE)

RDEFINE FACILITY IRR.DIGTCERT.LIST UACC(NONE)

RDEFINE FACILITY IRR.DIGTCERT.LISTRING UACC(NONE)

SETROPTS REFRESH RACLIST(FACILITY) GENERIC(FACILITY)

/*

//

Step 6: Create a key ring for the IntelliMagic certificate.

//*

//* Create Key ring

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RACDCERT ID(<USER-ID>) ADDRING(IMCERT)

/*

//

Step 7: Add the IntelliMagic certificate and associate it with a specific user.

//*

//* Add IM certificate to RACF

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RACDCERT ID(<USER-ID>) ADD('SHARE21.IM.CERT') +

WITHLABEL('IntelliMagic Certificate') +

TRUST

SETROPTS REFRESH RACLIST(DIGTCERT)

/*

//

Step 8: Connect the certificate to the IntelliMagic keyring.

//*

//* Connect certificate to keyring

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RACDCERT ID(<USER-ID>) CONNECT(LABEL('IntelliMagic Certificate') +

RING(IMCERT) USAGE(CERTAUTH))

SETROPTS REFRESH RACLIST(DIGTCERT)

/*

//

Step 9 (optional): You may need to allow the specific user to use ICSF services.

//*

//* Allow user to CSFIQA

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

PERMIT CSFIQA CLASS(CSFSERV) ID(<USER-ID>) ACCESS(READ)

SETROPTS REFRESH RACLIST(CSFSERV)

/*

//

Step 10: Add the GoDaddy root certificate.

//*

//* Add root certificate to RACF

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RACDCERT CERTAUTH +

ADD('SHARE21.IM.CERT.GODADDY') +

WITHLABEL('IntelliMagic Root Certificate') +

HIGHTRUST

SETROPTS REFRESH RACLIST(DIGTCERT)

/*

//

Step 11: Connect the GoDaddy root certificate to the same key ring.

//*

//* Connect root certificate to keyring

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RACDCERT ID(<USER-ID>) CONNECT( +

CERTAUTH +

LABEL('IntelliMagic Root Certificate') +

RING(IMCERT) USAGE(CERTAUTH))

SETROPTS REFRESH RACLIST(DIGTCERT)

/*

//

Verify Secure FTP Transfer

Sample FTP JCL

Use this sample JCL to verify that secured FTP has been successfully implemented.

Substitute the FTP address given above for ftp-us.intellimagic.com.

Substitute your IntelliMagic FTP User-ID for <Your_FTP_User-ID>.

Substitute your FTP password for <Your Password>.

Note: A sample ring name (IMCERT) is used in this FTP JCL. Data set <USER-ID>.SMF.ZRF is used as a sample data set. Please specify a valid data set to test the FTP transmission.

Figure 1: FTPS from z/OS

//*

//* RMFPACK.JCL(A4FTPS) – FTP TLS secure transfer

//*

//FTP EXEC PGM=FTP,

// PARM=('POSIX(ON) ALL31(ON)',

// 'ENVAR("_CEE_ENVFILE_S=DD:STDENV")/')

//SYSUDUMP DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//OUTPUT DD SYSOUT=*

//SYSFTPD DD DISP=SHR,DSN=&PRODUCT..JCL(SYSFTPD)

//STDENV DD DISP=SHR,DSN=&PRODUCT..JCL(FTPENV)

//ZRFDATA DD DISP=SHR,DSN=<USER-ID>.SMF.ZRF

//INPUT DD *

ftp-us.intellimagic.com

<Your_FTP_User-ID>

<Your Password>

locsite fwfriendly

bin

cd upload

put //DD:ZRFDATA yymmdd.hhmm.customer.sysplex.smf7x.zrf

quit

//*

RMFPACK.JCL(SYSFTPD) contains the following:

SECURE_MECHANISM TLS

CLIENTEXIT TRUE

FWFRIENDLY TRUE

TLSRFCLEVEL CCCNONOTIFY

TLSMECHANISM FTP

SECURE_FTP REQUIRED

SECURE_CTRLCONN PRIVATE ; minimum level

SECURE_DATACONN PRIVATE ; Payload must be encrypted.

KEYRING <USER-ID>/IMCERT

EPSV4 FALSE

PASSIVEIGNOREADDR FALSE

RMFPACK.JCL(FTPENV) contains the following:

GSK_PROTOCOL_TLSV1_2=ON

Successful Transmission:

When you complete a successful transmission you will see the following type of messages:

EZA1450I IBM FTP CS V2R2

EZA1554I Connecting to: port: 21.

220-This system is operated by IntelliMagic.

220-Authorization from IntelliMagic staff is required to use this system. Use by unauthorized persons is strictly prohibited.

220 CrushFTP Server Ready

EZA1701I >>> AUTH TLS

234 Changing to secure mode…

EZA2895I Authentication negotiation succeeded

.

200 PORT command OK. Using secure data connection.

EZA2906I Data connection protection is private

Unsuccessful Transmission:

When the Secure FTP transmission fails you will see something like:

EZA1450I IBM FTP CS V2R2

EZA1456I Connect to ?

EZA1736I sftp.intellimagic.com

EZA1554I Connecting to: port: 21.

220-This system is operated by IntelliMagic.

220-Authorization from IntelliMagic staff is required to use this system. Use by unauthorized persons is strictly prohibited.

220 CrushFTP Server Ready

EZA2897I Authentication negotiation failed

EZA2898I Unable to successfully negotiate required authentication

EZA1701I >>> QUIT
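If you save the FTP job output, the two outcomes above can be told apart by message ID. The following is a minimal sketch that relies only on the messages shown in the samples: EZA2895I and EZA2906I for success, EZA2897I and EZA2898I for failure. The function name `classify_ftps_log` and the three result labels are hypothetical.

```python
def classify_ftps_log(lines):
    """Return 'secure', 'failed' or 'unknown' for z/OS FTP client output lines."""
    negotiated = False
    private_data = False
    for line in lines:
        parts = line.split()
        msg_id = parts[0] if parts else ""
        if msg_id in ("EZA2897I", "EZA2898I"):
            # TLS negotiation failed; nothing after this point is trustworthy.
            return "failed"
        if msg_id == "EZA2895I":
            negotiated = True
        elif msg_id == "EZA2906I":
            private_data = True
    # Require both the TLS handshake and an encrypted data connection.
    if negotiated and private_data:
        return "secure"
    return "unknown"
```

An 'unknown' result means neither pattern was found, for example because the job ended before connecting, so the full output should be reviewed by hand.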

Appendix

Appendices: Links to each Appendix

Appendix A: Module Definitions

Appendix B: Gathering BVIR data – IBM TS7700

Appendix C: Gathering DCOLLECT data – DISK Component

Appendix D: GEOXPARM

Appendix E: Gathering Additional Tape Management Configuration Data

Appendix F: z/OS Secure Option

Appendix G: Proxy FTP

Appendix H: Near Real Time (NRT) considerations

Appendix I: Help getting dates added to the FTP files

Appendix J: Gathering the CICS Data Dictionary and Statistics

Appendix K: SMF Intervals

Appendix L: Gathering Cache and ESS Statistics

Appendix M: Configuring TCP/IP for SMF 119.12 zERT Records

Appendix N: Configuring FTPS client for AT-TLS

Appendix O: Cloud Database Retention Settings

Appendix P: Configuring CICS and TCP/IP zERT Groups

Appendix

Appendix A: Module Definitions

The purpose of this appendix is to define the modules that are offered. IntelliMagic recommends that SMF record types for all modules be collected and transmitted so that they can be analyzed. The data that is required for each module can be found in Identify Required Data.

z/OS Systems Module: The purpose of this module is to review the z/OS systems ecosystem including WLM, Coupling Facility, XCF, Physical and Virtual Storage to determine if there are any availability concerns.

Db2 Module: This module reviews the Db2 subsystems and enables performance analysts to more effectively and efficiently manage and optimize their z/OS Db2 environment.

CICS Module: This module reviews the CICS regions and enables performance analysts to more effectively and efficiently manage and optimize their z/OS CICS transactions.

MQ Module: The purpose of this module is to review MQ enterprise messaging and provide the kind of automated intelligence needed to effectively manage today’s far-reaching MQ environments.

WebSphere Module: The purpose of this module is to review the WebSphere Application Server for z/OS transaction logging records (SMF 120 subtype 9), enabling you to track transaction response time and resource consumption.

MLC Assessment: The MLC assessment portion of the z/OS Systems module attempts to determine if IBM Monthly License Charge (MLC) software costs can be reduced through various optimizations.

TCP/IP & zERT Module: The zERT portion of the z/OS Systems module aids with the difficult task of z/OS security policy compliance.

z/OS Disk & Replication Module: The DISK module reviews the I/O ecosystem, including the I/O subsystem, storage system(s), replication and FICON director components, and determines if there are any performance, capacity or availability concerns.

z/OS TAPE Module: The z/OS Tape module reviews the Tape ecosystem including the logical devices, virtual tape system, physical tape system and the virtual tape inventory through the Tape Management Catalog in order to identify performance, capacity and availability concerns.

z/OS Connect Module: The z/OS Connect module enables performance analysts to manage and optimize their mainframe API environment, and to profile the requests coming into your system for essential management reporting and resource planning.

Appendix B: Gathering BVIR data – IBM TS7700

BVIR data provides information about how the TS7700 is operating, giving insight into the TS7700 front end, cache, replication and backend processing. The IBM Tape Tools at ftp://ftp.software.ibm.com/storage/tapetool provide the BVIRHSTV utility to extract the BVIR history data in a variable record format. This data is initially written to tape and then copied to disk.

If you’re unfamiliar with TAPETOOLS, start by reviewing the IBMTOOLS.TXT file.

You must use either RMFPack or SMF2ZRF to compress the BVIR data, similar to packing the SMF/RMF data. Use the BVIR History data from the TS7700 as input and create a .ZRB dataset as output.

Appendix C: Gathering DCOLLECT data – DISK Component

Gathering DCOLLECT data – Applicable if you want Disk Volume allocation information included.

IntelliMagic Vision can take advantage of additional sources of information in order to complete the picture of your storage environment. By providing DCOLLECT information, IntelliMagic Vision can provide Allocated and Free Space at a Volume, Storage Group and DSS level. DCOLLECT data is provided by execution of the IBM utility IDCAMS with the parameters “DCOLLECT OUTFILE(OUTFILE) NOD VOLUMES(*)”.

You should use either RMFPack or SMF2ZRF to compress the DCOLLECT data, similar to packing the SMF/RMF data. Use the output of the DCOLLECT data as input and create a .ZRD dataset as output.

Appendix D: GEOXPARM

In order for IntelliMagic Vision to create the SDM reports, it is necessary to provide the GDPS GEOXPARM file that defines the XRC configuration. This includes the mapping of the XRC Primary to Secondary volumes, defines the utility and journal volumes and defines which SDMs will manage these volumes.

It is important to understand that GEOXPARM references devices at both the Primary and Secondary (SDM) sites. Whether device addresses are unique across and within these sites determines whether you must provide additional information to allow IntelliMagic Vision to report on these devices in the correct context.

Appendix E: Gathering Additional Tape Management Configuration Data

Gathering Tape Management Catalog data – Applicable if you want to profile your tape library volumes.

IntelliMagic Vision can take advantage of additional sources of information in order to complete the picture of your storage environment. If your environment uses a Tape Management System (CA1, TLMS, RMM, Zara, or Control-T), then this TMS information can be used to report the tape inventory. The IBM Tape Tools at ftp://ftp.software.ibm.com/storage/tapetool provide the FORMCATS utility to extract the TMS data into a common format. To provide complete information, please provide information from each TMSPLEX (if multiple tape catalogs are used). Catalog VOLCATs are not used as input to IntelliMagic Vision for z/OS Tape.

You must use either RMFPack or SMF2ZRF to compress the TMS data, similar to packing the SMF/RMF data. Use the TMS data in common form as input and create a ‘.ZRT’ dataset as output.

Gathering additional MVC VSM data – the optional .XML data

IntelliMagic Vision can take advantage of additional sources of information in order to improve the reporting of MVC data from an Oracle VSM environment. We will accept the MVCRPT output as data. The MVCRPT command is documented in the StorageTek ELS Programming Reference, and the command should be executed on the same system that generates the HSC User Records. The generated .XML file may be processed on z/OS in an execution of IntelliMagic Vision Tape Analysis, or transferred to Windows as a text file and included in the Tape Analyze execution on that platform. There is no need to RMFPACK this data prior to transmission.

Appendix F: z/OS Secure Option

This section describes the process to set up FTPS (FTP using SSL/TLS) on z/OS.

See Appendix N: Configuring FTPS client for AT-TLS for configuring AT-TLS.

| Note: SFTP is a different secured FTP that is implemented on z/OS by installing an SSH client (e.g. OpenSSH). This will not be described in this section. |

FTPS Requirements:

Obtain the GoDaddy intermediate certificate (GoDaddy_bundle-g2-g1.zip) from IntelliMagic. This will be provided to you in your customer package email sent from IntelliMagic.

Only the GoDaddy Root Certificate, gd_bundle-g2-g1.crt, is needed to upload data securely to our ftp sites.

Installation of GoDaddy Certificate

After uploading the certificate to z/OS, run these steps to install the certificate required for FTPS into RACF.

//*Step 1: Add the GoDaddy root certificate.

//*

//* Add root certificate to RACF

//*

//STEP1 EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RACDCERT CERTAUTH +

ADD('RMFPACK.IM.CERT.GODADDY') +

WITHLABEL('IntelliMagic Root Certificate') +

HIGHTRUST

SETROPTS REFRESH RACLIST(DIGTCERT)

/*

//*Step 2: Connect the GoDaddy root certificate to the same key ring.

//*

//* Connect root certificate to keyring

//*

//STEP2 EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RACDCERT ID(IMUSMS1) CONNECT( +

CERTAUTH +

LABEL('IntelliMagic Root Certificate') +

RING(IMCERT) USAGE(CERTAUTH))

SETROPTS REFRESH RACLIST(DIGTCERT)

/*

These are the Ciphers available for the IntelliMagic FTPS server

While some less secure ciphers are allowed, secure ciphers are preferred. The IntelliMagic FTP server supports the following ciphers:

| Less Secure Ciphers | Secure Ciphers |

| TLS_DHE_RSA_WITH_AES_128_CBC_SHA | TLS_ECDH_RSA_WITH_AES_128_CBC_SHA256 |

| TLS_DHE_RSA_WITH_AES_128_GCM_SHA256 | TLS_ECDH_RSA_WITH_AES_128_CBC_SHA |

| TLS_DHE_RSA_WITH_AES_128_CBC_SHA256 | TLS_ECDH_ECDSA_WITH_AES_128_GCM_SHA256 |

| TLS_DHE_DSS_WITH_AES_128_GCM_SHA256 | TLS_ECDH_ECDSA_WITH_AES_128_CBC_SHA256 |

| TLS_DHE_DSS_WITH_AES_128_CBC_SHA256 | TLS_ECDH_ECDSA_WITH_AES_128_CBC_SHA |

| TLS_DHE_DSS_WITH_AES_128_CBC_SHA | TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256 |

| TLS_DHE_RSA_WITH_AES_256_GCM_SHA384 | TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256 |

| TLS_DHE_RSA_WITH_AES_256_CBC_SHA256 | TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA |

| TLS_DHE_RSA_WITH_AES_256_CBC_SHA | TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256 |

| TLS_DHE_DSS_WITH_AES_256_GCM_SHA384 | TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256 |

| TLS_DHE_DSS_WITH_AES_256_CBC_SHA256 | TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA |

| TLS_DHE_DSS_WITH_AES_256_CBC_SHA | TLS_ECDH_RSA_WITH_AES_256_GCM_SHA384 |

| TLS_RSA_WITH_AES_128_CBC_SHA | TLS_ECDH_RSA_WITH_AES_256_CBC_SHA384 |

| TLS_RSA_WITH_AES_128_GCM_SHA256 | TLS_ECDH_RSA_WITH_AES_256_CBC_SHA |

| TLS_RSA_WITH_AES_128_CBC_SHA256 | TLS_ECDH_ECDSA_WITH_AES_256_GCM_SHA384 |

| TLS_RSA_WITH_AES_256_GCM_SHA384 | TLS_ECDH_ECDSA_WITH_AES_256_CBC_SHA384 |

| TLS_RSA_WITH_AES_256_CBC_SHA256 | TLS_ECDH_ECDSA_WITH_AES_256_CBC_SHA |

| TLS_RSA_WITH_AES_256_CBC_SHA | TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 |

| | TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA384 |

| | TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA |

| | TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384 |

| | TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA384 |

| | TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA |

Appendix G: Proxy FTP

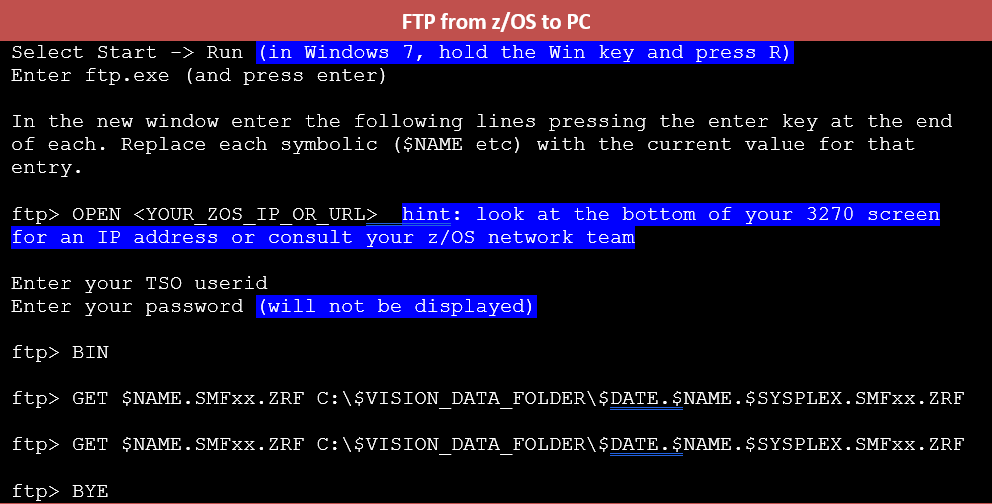

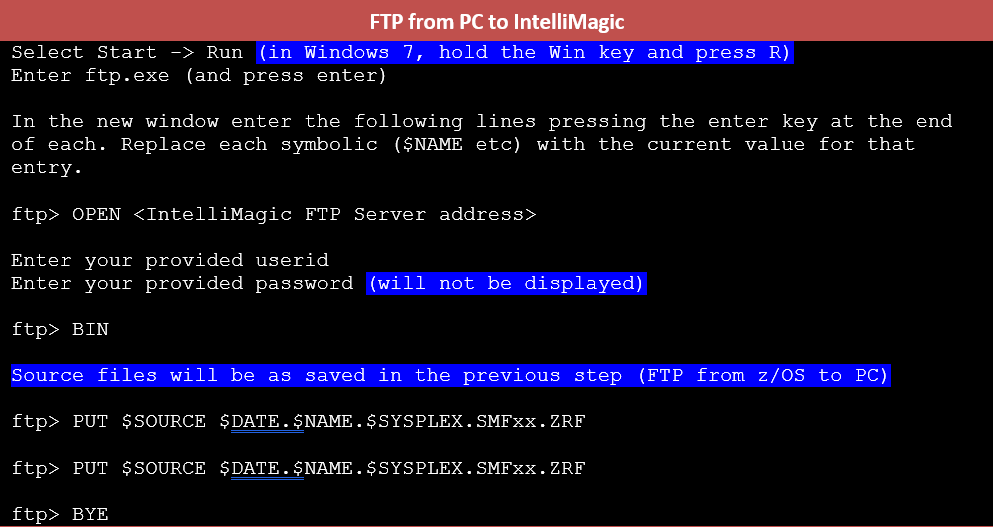

This option is required for environments that do not allow direct transfer from z/OS to an external FTP/SFTP site. You will download the data to an intermediate system and then use that system to FTP the data to IntelliMagic.

In any case, it is critical to set the data transfer method to BINARY (BIN, per step 6 below) rather than the common default of TEXT/ASCII.

The basic method is via MS command shell as follows:

1. Transfer Data from z/OS to PC

2. Transfer Data from PC to IntelliMagic

You can also use SFTP using an SFTP client or simply by pointing your browser to the supplied FTP server.
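
As an illustration of the binary-mode requirement for the second hop (PC to IntelliMagic), here is a minimal Python sketch using the standard `ftplib` module. The helper names `open_session` and `upload_binary`, and any host, user or file names you pass in, are hypothetical and not part of the IntelliMagic package. It assumes the target server accepts explicit FTPS.

```python
from ftplib import FTP_TLS


def open_session(host, user, password):
    """Open an explicit FTPS session (host and credentials are site-specific)."""
    ftp = FTP_TLS(host)
    ftp.login(user, password)   # negotiates TLS on the control connection first
    ftp.prot_p()                # also protect the data connection
    return ftp


def upload_binary(ftp, local_path, remote_name):
    """Send one packed file without any text or ASCII translation."""
    with open(local_path, "rb") as fh:              # read raw bytes
        # storbinary switches the session to TYPE I (binary) before STOR
        ftp.storbinary(f"STOR {remote_name}", fh)
```

A typical call sequence would be `ftp = open_session(...)`, one `upload_binary(ftp, local, remote)` per packed file, then `ftp.quit()`. Because `storbinary` always transfers in image mode, the packed data cannot be corrupted by an ASCII default.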

Appendix H: Near Real Time (NRT) considerations

NRT as a process is largely unchanged from what customers were doing with their daily loads. We will need SMF/BVIR data from all LPARs in the reporting sysplexes and this process can start with the ‘I SMF’ command. It’s the same process of:

We are simply performing the database load more frequently than once per day. If IntelliMagic is also processing TMC data, this data still needs to be sent no more frequently than once per day. For DCOLLECT data, the data needs to be sent along with the other SMF data at the same intervals. The same daily DCOLLECT file can be sent along with the SMF data for each interval, so that the collection processing is still only run once a day.

There are other considerations for NRT if you are processing this data on premises. The intent of this document is to address data collection for offsite processing (in the cloud), so considerations for consolidation and reorg are not discussed here. For these topics, refer to the IntelliMagic Vision for zOS Reference Guide.

One additional concern is adding a timestamp to the file names sent to IntelliMagic. Our preference is to have the ending interval time as a separate qualifier following the date. Because you are sending to a non-z/OS FTP server, the qualifiers do not need to start with an alphabetic character (as long as their position is fixed).

| Customer, Date, System and Records | File Name |

| World Wide Bank, March 9th 2020, DEVPLEX, 42 records | 200309.0300.WWB.DEVPLEX.SMF42.ZRF |

| Computer Manufacturer, May 1st 2019, DB2PLEX, 7X records | 190501.0300.COMPMAN.DB2PLEX.SMF7X.ZRF |

| Big Insurance, January 2nd 2020, PRODPLEX Eastern Standard Time, EAST LPAR, 7X records | 200102.2300.BIGINS.PRODPLEX.EAST.SMF7X.ZRF |

| Big Insurance, January 2nd 2020, PRODPLEX Mountain Standard Time, DENV LPAR, 7X records | 200102.2300.BIGINS.PRODPLEX.DENV.SMF7X.ZRF |

| Big Insurance, January 2nd 2020, PRODPLEX Mountain Standard Time with no daylight savings shift, PHX LPAR, 7X records | 200102.2300.BIGINS.PRODPLEX.PHX.SMF7X.ZRF |

| Note: If sending as NRT, all files must include a timestamp (ex. ‘190102.2300’), even if it is daily data. |
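
The naming pattern in the table above can be sketched as a small Python helper. The function name `nrt_file_name` and its parameters are hypothetical, and the qualifier order (date, ending interval, customer, sysplex, optional LPAR, record group, extension) is inferred from the sample rows rather than taken from a formal specification.

```python
from datetime import date


def nrt_file_name(day, end_hhmm, customer, sysplex, records, lpar=None, ext="ZRF"):
    """Build an NRT file name: YYMMDD.HHMM.customer.sysplex[.lpar].records.ext"""
    parts = [day.strftime("%y%m%d"), end_hhmm, customer, sysplex]
    if lpar:                      # the LPAR qualifier is only present in some rows
        parts.append(lpar)
    parts += [records, ext]
    return ".".join(parts)


# First and third rows of the table above
wwb = nrt_file_name(date(2020, 3, 9), "0300", "WWB", "DEVPLEX", "SMF42")
east = nrt_file_name(date(2020, 1, 2), "2300", "BIGINS", "PRODPLEX", "SMF7X", lpar="EAST")
```

Keeping the date and ending interval as the first two qualifiers means the position of each qualifier stays fixed regardless of whether an LPAR qualifier is added.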

Appendix I: Help getting dates added to the FTP files

Here is an example of how to add the current date to the names of files sent to IntelliMagic. It can be added to a defined, automated process. It consists of a REXX EXEC executed by IKJEFT01, sample FTP JCL, and an execution JCL. The execution JCL runs the REXX EXEC, which reads, modifies and submits the FTP JCL. The sample FTP JCL sends the data files, which have been previously compressed using RMFPACK. When sending data directly to IntelliMagic, this FTP sends to a non-z/OS FTP server, so the qualifiers used in the destination file name do not need to start with an alphabetic character or be limited to 8 characters in length.

You can download or view the REXX EXEC sample below.

Rexx EXEC

/* REXX */

/* TRACE ?R */

ADDRESS TSO

/*******************************************************************/

/* THIS REXX EXEC PROVIDES A GREGORIAN DATE (YYYYMMDD.HH00.HH00) */

/* AS A PREFIX TO THE FILE DESTINATION OF AN FTP. IT WILL USE */

/* TODAY'S DATE UNLESS PROVIDED AN ALTERNATIVE AND SUBMIT THE FTP */

/* JOB FOR EXECUTION. */

/* THE FTP JOB HAS BEEN CHANGED TO SPECIFY #DATE IN THE */

/* PLACE OF SUBSTITUTION. */

/* ...DAVE HEGGEN INTELLIMAGIC INC. */

/*******************************************************************/

ARG DATE

IF DATE = '' THEN

DO

DATE = DATE('S')

YEAR = SUBSTR(DATE,1,4)

MONTH = SUBSTR(DATE,5,2)

DAY = SUBSTR(DATE,7,2)

DATE = MONTH DAY YEAR

END

ABORT = 'NO'

PARSE VAR DATE MONTH DAY YEAR .

IF DAY > '31' THEN

do

ABORT = 'YES'

SAY 'DAY OUT OF RANGE'

end

IF DAY < '01' THEN

do

ABORT = 'YES'

SAY 'DAY OUT OF RANGE'

end

IF MONTH > '12' THEN

do

ABORT = 'YES'

SAY 'MONTH OUT OF RANGE'

end

IF MONTH < '01' THEN

do

ABORT = 'YES'

SAY 'MONTH OUT OF RANGE'

end

IF YEAR > '2100' THEN

do

ABORT = 'YES'

SAY 'YEAR OUT OF RANGE'

end

IF YEAR < '2000' THEN

do

ABORT = 'YES'

SAY 'YEAR OUT OF RANGE'

end

IF ABORT = 'YES' THEN

DO

SAY 'PLEASE SPECIFY DATE AS MM DD YYYY INSTEAD OF' DATE

EXIT(12)

END

/* TIME('H') HAS NO LEADING ZERO, SO PAD BOTH HOURS TO 2 DIGITS */

/* NOTE: THE STARTING HOUR IS NOT VALID FOR A RUN DURING HOUR 00 */

hour = RIGHT(TIME('H'),2,'0') /* ENDING HOUR OF DATA TO SEND */

shour = RIGHT(hour - 1,2,'0') /* STARTING HOUR OF DATA TO SEND */

DATE = YEAR || MONTH || DAY || '.' || shour || '00.' || hour || '00'

FOUND = 'N' /* RECORD OF INTEREST */

I = 0

"EXECIO 0 DISKW SUBTEMP"

"EXECIO 1 DISKR JCLR"

DO WHILE (RC = 0) /* NOT EOF */

PARSE PULL RRECORD

PARSE VAR RRECORD FIRST .

SELECT

WHEN FIRST = 'PUT' THEN

FOUND = 'Y' /* FOUND THE FTP PUT STATEMENT */

when first = 'put' then

FOUND = 'Y' /* FOUND THE FTP PUT STATEMENT */

OTHERWISE

NOP

END /*SELECT*/

IF FOUND = 'Y' THEN /* LOOK FOR #DATE AND SUBSTITUTE */

DO

FOUND = 'N' /* RECORD OF INTEREST */

LEN = LENGTH('#DATE') /* STRING TO LOOK FOR */

LOC1 = POS('#DATE',RRECORD,1)

LOC2 = pos('#date',rrecord,1)

IF LOC1 = LOC2 THEN /* they BOTH are 0 */

FOUND = 'Y' /* keep looking */

else

do

location = loc1 + loc2

RRECORD = SUBSTR(RRECORD,1,LOCATION-1) || DATE ||,

SUBSTR(RRECORD,LOCATION+LEN)

end

/* SAY RRECORD */

END

PUSH RRECORD

"EXECIO 1 DISKW SUBTEMP"

"EXECIO 1 DISKR JCLR"

END

"EXECIO 0 DISKR JCLR (FINIS"

"EXECIO 0 DISKW SUBTEMP (FINIS"

X = LISTDSI("SUBTEMP" "FILE") /* GET THE NAME OF THE TEMP DSN */

say "SUBMIT" "'" || SYSDSNAME || "'"

"SUBMIT" "'" || SYSDSNAME || "'"

EXIT (0)

Sample JCL - FTP template

//IMUSDH19 JOB ,IMUSDH1,NOTIFY=&SYSUID,MSGLEVEL=(1,1),

// REGION=0M,MSGCLASS=H,USER=&SYSUID

//*

//FTP EXEC PGM=FTP,PARM='(EXIT'

//SYSPRINT DD SYSOUT=*

//SYSIN DD *

FTPSERVER_ADDRESS

USER

PASSWORDGOESHERE

BIN

PUT 'CAP.RMFMAGIC.PRDPLEX.SMFOTH.PACKED' +

#DATE.CompanyName.PRDPLEX.SMFOTH.ZRF

PUT 'CAP.RMFMAGIC.PRDPLEX.SMFTAPE.PACKED' +

#DATE.CompanyName.PRDPLEX.SMFTAPE.ZRF

PUT 'CAP.RMFMAGIC.PRDPLEX.SMF30.PACKED' +

#DATE.CompanyName.PRDPLEX.SMF30.ZRF

PUT 'CAP.RMFMAGIC.PRDPLEX.SMF42.PACKED' +

#DATE.CompanyName.PRDPLEX.SMF42.ZRF

PUT 'CAP.RMFMAGIC.PRDPLEX.SMF7X.PACKED' +

#DATE.CompanyName.PRDPLEX.SMF7X.ZRF

PUT 'CAP.RMFMAGIC.PRDPLEX.BVIRG1.PACKED' +

#DATE.CompanyName.PRDPLEX.BVIRG1.ZRB

PUT 'CAP.RMFMAGIC.PRDPLEX.BVIRG2.PACKED' +

#DATE.CompanyName.PRDPLEX.BVIRG2.ZRB

PUT 'CAP.RMFMAGIC.PRDPLEX.BVIRG3.PACKED' +

#DATE.CompanyName.PRDPLEX.BVIRG3.ZRB

PUT 'CAP.RMFMAGIC.PRDPLEX.BVIRG4.PACKED' +

#DATE.CompanyName.PRDPLEX.BVIRG4.ZRB

QUIT

Execution JCL – IKJEFT01 program to run the Rexx EXEC

//IMUSDH1Z JOB ,IMUSDH1,NOTIFY=&SYSUID,MSGLEVEL=(1,1),

// REGION=0M,MSGCLASS=H,USER=&SYSUID

//STEP03 EXEC PGM=IKJEFT01,

// PARM='%SLAMDATE'

//* PARM='%SLAMDATE 10 12 2013'

//* PARM='%SLAMDATE 16 12 2013' ERROR TEST

//* PARM='%SLAMDATE 10 56 2013' ERROR TEST

//* PARM='%SLAMDATE 10 12 4313' ERROR TEST

//SYSEXEC DD DSN=IMUSDH1.REXX.CLIST,DISP=SHR

//SYSPRINT DD SYSOUT=*

//SYSTSPRT DD SYSOUT=*

//JCLR DD DSN=IMUSDH1.JCL.CNTL(FTP),DISP=SHR

//SUBTEMP DD UNIT=SYSDA,SPACE=(TRK,(1,1)),

// DCB=(RECFM=FB,LRECL=80,BLKSIZE=0,DSORG=PS)

//SYSTSIN DD DUMMY

//SYSIN DD DUMMY

//
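
For sites that stage files through a PC (see Appendix G), the same #DATE substitution can be sketched in Python. This is not a port supplied by IntelliMagic. The function names `date_prefix` and `substitute_date` are hypothetical, the sketch matches `PUT` and `#DATE` in any letter case (the REXX EXEC checks only all-upper and all-lower), and it takes the starting hour by subtracting one hour from the current time.

```python
from datetime import datetime, timedelta


def date_prefix(now):
    """Return YYYYMMDD.HH00.HH00: today's date, previous hour, current hour."""
    start = now - timedelta(hours=1)
    return f"{now:%Y%m%d}.{start:%H}00.{now:%H}00"


def substitute_date(lines, prefix):
    """Replace #DATE on, or on the continuation after, each FTP PUT statement."""
    out = []
    pending = False                      # True while a PUT still awaits its #DATE
    for line in lines:
        words = line.split()
        if words and words[0].upper() == "PUT":
            pending = True
        if pending:
            idx = line.upper().find("#DATE")
            if idx >= 0:
                line = line[:idx] + prefix + line[idx + len("#DATE"):]
                pending = False          # this PUT is done
        out.append(line)
    return out


template = [
    "BIN",
    "PUT 'CAP.RMFMAGIC.PRDPLEX.SMF30.PACKED' +",
    "#DATE.CompanyName.PRDPLEX.SMF30.ZRF",
    "QUIT",
]
stamped = substitute_date(template, date_prefix(datetime(2020, 3, 9, 9, 15)))
```

As in the REXX EXEC, only lines that belong to a PUT statement are scanned, so a stray `#DATE` elsewhere in the template is left untouched.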

Appendix J: Gathering the CICS Data Dictionary and Statistics

The CICS data dictionary is used to customize the CICS 110.1 records (usually done to reduce the volume of data). If that customization is implemented at your site, the data dictionary record is required to process the 110 records. There are two ways to include the CICS data dictionary with your CICS 110.1 records.

1. This can be created using the IBM DFHMNDUP job. Sample JCL provided below:

//SMFMNDUP JOB (accounting information),CLASS=A,

// MSGCLASS=A

//****************************************************************

//* Step 1 - Create new dictionary record and output to SYSUT4

//****************************************************************

//MNDUP EXEC PGM=DFHMNDUP

//STEPLIB DD DSN=CICSTS54.CICS.SDFHLOAD,DISP=SHR

// DD DSN=mct.table.loadlib,DISP=SHR

//SYSUT4 DD DSN=dict.record.dsn,DISP=(NEW,CATLG),

// UNIT=SYSDA,SPACE=(TRK,(1,1))

//SYSPRINT DD SYSOUT=A

//SYSUDUMP DD SYSOUT=A

//SYSIN DD *

MCT=NO

SYSID=PRD1

GAPPLID=AOR98M12

DATE=2022158

TIME=090000

/*

//****************************************************************

//* Step 2 - Run RMFPACK on data set with dictionary record from

//* step 1 (same process as used for previous SMF data)

//****************************************************************

//****************************************************************

//* Step 3 - FTP RMFPACK output from step 2 should be named

//* DYYYYMMDD.$SYSPLEXNAME.CICS.DATA.DICTIONARY.ZRF

//* If you have multiple data dictionaries per SYSPLEX please

//* let us know.

//****************************************************************

- First line of STEPLIB concatenation: specify CICS library containing the DFHMNDUP program.

- Second line of STEPLIB concatenation: specify the library from the DFHRPL concatenation that contains the modified monitoring control table (MCT) used for the CICS region(s) that are related to the 110 data.

- SYSUT4 = specifies a data set to hold the dictionary record. Specify the DISP parameter according to whether the data set already exists, or a new one is to be created and cataloged.

- MCT= specifies the suffix of the monitoring control table (MCT) used in the CICS run for which you are analyzing performance data. If your CICS region ran with the system initialization parameter MCT=NO (which results in a default MCT dynamically created by the CICS monitoring domain), specify MCT=NO; otherwise, specify the suffix of the DFHMCT member.

- SYSID=xxxx specifies the system identifier of the MVS system that owns the SMF data sets.

- GAPPLID=name specifies the APPLID specified on either the APPLID system initialization parameter, or the generic APPLID in an XRF environment for which you are analyzing performance data.

- DATE=yyyyddd specifies the Julian date to be included in the dictionary record.

- TIME=hhmmss specifies the time in hours minutes seconds in the dictionary record.
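
The DATE and TIME values in the parameter list above can be derived from a calendar date and clock time. A minimal Python sketch follows; the helper names `mndup_date` and `mndup_time` are hypothetical.

```python
from datetime import date, time


def mndup_date(day):
    """Format a calendar date as yyyyddd (year plus day-of-year) for DATE=."""
    return day.strftime("%Y%j")


def mndup_time(clock):
    """Format a time of day as hhmmss for TIME=."""
    return clock.strftime("%H%M%S")


# The sample JCL uses DATE=2022158 and TIME=090000, i.e. 7 June 2022 at 09:00:00
sample_date = mndup_date(date(2022, 6, 7))
sample_time = mndup_time(time(9, 0, 0))
```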

2. Gather the CICS data from a region that was restarted

CICS region startup processing automatically writes the CICS dictionary record in the SMF recording process so if 110 records are being cut by SMF, the CICS dictionary will be written out when a CICS region is started.

A day of data collected when CICS region maintenance normally occurs is not typically of interest for performance at peak processing times; however, this collection period would include a CICS restart. It is likely the same dictionary is used for many or all regions within a sysplex, but this should be confirmed to ensure a unique dictionary is applied for the regions in scope for the SMF data collected.

Gathering the CICS Statistics

The CICS Statistics are recorded in the CICS 110.2 records. The following statistic types are required and must be included in your CICS 110.2 records.

CICS STATISTICS SUMMARY

| Type | Id | Macro | Description |

| 010 | STIXMG | DFHXMGDS | Transaction manager (Globals) id |

| 011 | STIXMR | DFHXMRDS | Transaction manager (Trans) id |

| 012 | STIXMC | DFHXMCDS | Transaction manager (Tclass) id |

| 029 | STISMDSA | DFHSMSDS | Storage manager DSA id |

| 034 | STITCR | DFHA06DS | Terminal control (resid) id |

| 052 | STICONSR | DFHA14DS | ISC/IRC system entry (resid) id |

| 062 | STIUSG | DFHDSGDS | Dispatcher stats id |

| 067 | STIFCR | DFHA17DS | File Control (resid) id |

| 097 | STINQG | DFHNQGDS | Enqueue mgr stats (global) id |

| 103 | STID2R | DFHD2RDS | DB2 entry stats (resource) id |

Appendix K: SMF Intervals

Internal processing in IntelliMagic Vision is performed on a Sysplex Boundary. We want the SMF data from all LPARS in a Sysplex, and if multiple Sysplexes attach to the same hardware, then we want these Sysplexes together in the same interest group. By processing the data in this manner, an interest group will provide an accurate representation of the hardware’s perspective of activity and allow an evaluation of whether this activity is below, equal to, or above the hardware’s capability. It’s also true that the shorter the interval, the more accurate the data will be in showing peaks and lulls. The shortest interval you can define is 1 minute. This would typically be the average of 60 samples (1 cycle per second). It’s always a balancing act between the accuracy of the data and the size/cost of storing and processing the data.

In IntelliMagic Vision we also bridge across SMF record types. Accomplishing this feat requires that the SMF/RMF or SMF/CMF data sources are coordinated. There are two variables to manage this.

1) Interval Length (generally 15-30 minutes)

2) Start of the interval (often the top of the hour)

IntelliMagic Vision will throw out data from an LPAR when the data is not recorded in the same interval as all the other LPARs. To handle such variation would impact the reporting accuracy of the Sysplex or consolidated Hardware views. There are overrides available in Reduce to set the interval length, so your options are override or correct. It is possible to override/normalize to a higher interval length, but not a shorter one.

You may see a message such as:

RMF0109E 12 System D001 has an interval length of 1800 s, cannot be normalized to standard interval length of 900 seconds. Records will be dropped.
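
The normalization rule can be sketched as a simple pre-check before sending data. The function names below are hypothetical, and the requirement that the standard interval be an exact multiple of the LPAR interval is an assumption of this sketch, not something stated by the product documentation.

```python
def can_normalize(lpar_interval_s, standard_interval_s):
    """True if data recorded every lpar_interval_s can be rolled up to the standard.

    Rolling up to a longer interval is possible; splitting to a shorter one is not.
    Assumption: the standard must be a whole multiple of the LPAR interval.
    """
    return (lpar_interval_s <= standard_interval_s
            and standard_interval_s % lpar_interval_s == 0)


def systems_at_risk(intervals_by_system, standard_interval_s):
    """List the systems whose records would be dropped at the given standard."""
    return sorted(name for name, secs in intervals_by_system.items()
                  if not can_normalize(secs, standard_interval_s))


# The case from the message above: D001 at 1800 s against a 900 s standard.
# D002 is a hypothetical second system recording at 900 s.
at_risk = systems_at_risk({"D001": 1800, "D002": 900}, 900)
```

Raising the standard interval to 1800 seconds would allow both systems to be kept, at the cost of coarser data for the system that records at 900 seconds.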

If you want to know how SMF is recorded, issue the operator command ‘D SMF’ or examine the active SMFPRMxx parmlib member. Pay attention to the settings of INTVAL and SYNCVAL parameters (specified in minutes).

RMF

If you want to know how RMF is recorded, issue the operator command ‘F RMF,D ZZ’ or examine the active ERBRMFxx Parmlib member. The Settings of SYNC and INTERVAL are of interest.

If you specify SYNC(SMF) then the INTERVAL is ignored. SMF Values for INTVAL and SYNCVAL are used.

If you specify SYNC(RMF, mm) then INTERVAL is used and recording starts at mm.

If you specify NOSYNC then INTERVAL is used (the default interval start value appears to be 00).

To have RMF use the same settings as SMF, specify ERBRMFxx value SYNC(SMF). That way, if you change the SMF settings, then RMF will follow.

CMF

Some customers use CMF from BMC to provide their RMF type support. In this situation, issue the operator command ‘F MNVZPAS,DC=STATUS’.

This will produce a series of console messages…

BBDDA101I ---------- Regular Interval Information ----------

BBDDA101I Base Cycle Time (in 1/100s) ......: 100

BBDDA101I Record Interval Time (in 1/100s) .: 90000

BBDDA101I Number of Cycles/Interval ........: 900 (100%)

BBDDA101I #of Cycles in current Interval ...: 89 ( 9%)

BBDDA101I Total #of Cycles .................: 5620394

BBDDA101I Total #of Regular Intervals ......: 6277

Pay particular attention to the number of cycles per interval. In this case it is 900 cycles of one second each (a base cycle time of 100 hundredths of a second), or 900 seconds, which equates to 15 minutes. CMF parameters that control interval length and the start of the interval are:

REPORT CPM

,INTERVAL={30|nn|HOUR|QTR|HALF|SMF}

,SMF=YES

,SYNCH={00|nn|HOUR|QTR|HALF|SMF}

To have CMF use the same settings as SMF, in CMF specify SMF=YES,SYNCH=SMF. That way, if you change the SMF settings, then CMF will follow.
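
The arithmetic behind the console output above can be checked with a few lines of Python. The function name `cmf_interval` is hypothetical; the inputs are the Base Cycle Time and Record Interval Time values, both reported in hundredths of a second.

```python
def cmf_interval(base_cycle_cs, record_interval_cs):
    """Return (cycles per interval, interval seconds, interval minutes)."""
    cycles = record_interval_cs // base_cycle_cs   # samples taken per interval
    seconds = record_interval_cs / 100             # hundredths of a second to seconds
    return cycles, seconds, seconds / 60


# Values from the sample output: base cycle 100, record interval 90000
cycles, seconds, minutes = cmf_interval(100, 90000)
```

For the sample output this gives 900 cycles, 900 seconds and 15 minutes, matching the Number of Cycles/Interval line.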

SMF Interval Data

The default SMF interval is 30 minutes and some customers will balk at using 15 minutes because they think it will double their SMF recording. This is likely not the case. Most SMF record types are written because an event occurred, not because a timer expired. Interval recording impacts type 30 Subtype 2 and type 42 and 7x data only. So yes, there’s an increase, but your daily SMF is not going to double. Depending on the number of records, this is a sample of the size of the SMF Interval Records.

| SMF Record Type | Average Record Size (bytes) | Min Record Size (bytes) | Max Record Size (bytes) |

| 30.2 | 2605.92 | 540 | 32730 |

| 30.3 | 1595.62 | 666 | 32730 |

| 42.5 | 32186.81 | 492 | 32748 |

| 42.6 | 675.75 | 284 | 32740 |
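
To put the interval-length concern in numbers, here is a rough Python sketch. The workload figures (5 GB per day of event-driven records and 1,000 type 30.2 records per interval) are made up for illustration; only the 2605.92-byte average comes from the table above. The function names are hypothetical.

```python
def daily_interval_bytes(avg_record_bytes, records_per_interval, interval_minutes):
    """Bytes written per day by one interval-driven record type."""
    intervals_per_day = (24 * 60) // interval_minutes
    return avg_record_bytes * records_per_interval * intervals_per_day


def daily_total_bytes(event_bytes, interval_bytes):
    """Event-driven volume does not change with the interval length."""
    return event_bytes + interval_bytes


EVENT_BYTES = 5_000_000_000          # hypothetical event-driven volume per day
at_30 = daily_total_bytes(EVENT_BYTES, daily_interval_bytes(2605.92, 1000, 30))
at_15 = daily_total_bytes(EVENT_BYTES, daily_interval_bytes(2605.92, 1000, 15))
growth = at_15 / at_30               # ratio of daily volume, 15 vs 30 minutes
```

Only the interval-driven portion doubles when the interval is halved, so the growth ratio for the whole day stays well below 2 whenever event-driven records dominate the volume.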

MQ Interval Data

By default MQ writes its accounting data once every 30 minutes (STATIME=30), and the intervals are not synchronized. We strongly recommend that you configure MQ to use SMF synchronized recording by setting STATIME=0 in the CSQ6SYSP system parameter module. The benefits are that the SMF data is synchronized between MQ subsystems, and with other SMF information about MQ, such as provided with job (30), data set (42) and RMF records. It also makes it easier for IntelliMagic Vision to process the intervals, as the native interval support in MQ tends to produce somewhat variable interval lengths.

Appendix L: Gathering Cache and ESS Statistics

The 74.5 record contains cache statistics and must be enabled. The 74.7 record contains Director Control Unit Port (CUP) statistics and must be enabled. The 74.8 record contains Array and Link statistics and must be enabled. Enablement of this data collection differs between RMF and CMF. If replication is present, the customer must supply the port type (SYNC or ASYNC) for each DSS and the distance between sites (miles/km or local).

Ideally, 74.5 and 74.8 data collection needs to come from only one LPAR in the SYSPLEX, but it should be the LPAR that has connectivity to all DSSs and has the reporting volumes online. There’s no problem in specifying CACHE and ESS in more than one (or all) LPARs; IntelliMagic Vision throws out the duplicate records when they are encountered. Some customers prefer to specify CACHE and ESS in more than one LPAR, because not all LPARs are active in all intervals, and they want continuous recording of this information.

FICON Director/Switch statistics are stored on the switch platform itself and do not need to be accessed by more than one LPAR out of all the LPARs that run data through the switch. Every CEC and LPAR in every Sysplex that might be attached to a switching device can retrieve the switch information using a single LPAR, which can then cut the 74.7 RMF/CMF records for all CECs, LPARs and Sysplexes.

Brocade tells their students that they should enable FICON switch data collection in only one LPAR of their Sysplex. They should also make sure that FICON STATS=NO has been set in the IECIOSxx member of all LPARs except in the one LPAR where data collection is enabled in the Sysplex.

In 2007, it was warned that obtaining Director Statistics (74.7) data from multiple LPARs could result in Missing Interrupts and boxed devices. Following this guideline will mean only the selected LPAR will report switch activity/errors, even with multiple Sysplexes involved.

In cases where Remote Copy exists, it’s also useful to have a volume from the secondary DSS online to the primary host. That way we gather 74.8 information from both ends of the remote copy and can see both send and receive activity in the remote copy session(s).

Not all hardware vendors fully support the 74.8 records, but in no case is the request from RMF given an error.

To enable in either RMF or CMF follow the instructions below accordingly.

RMF

The ERBRMFxx Parmlib member needs to be updated accordingly:

74.5 cache statistics collection – specify CACHE (NOCACHE is the default)

74.7 FICON director data collection – specify FCD (the default is NOFCD – disabled).

74.8 ESS Array and Link statistics – specify ESS (NOESS is the default).

CMF

To turn on the 74.5 and 74.8 cache statistics use the CMF statement CACHE RECORDS=ALL. Specifying CACHE will collect 74.5 records and specifying ESS will collect 74.8 records.

To turn on 74.7 FICON switch data collection use the CMF statement FICONSW.

Appendix M: Configuring TCP/IP for SMF 119.12 zERT Records

The 119.12 record contains zERT statistics and must be enabled. To enable zERT logging, follow the instructions below: use the SMFCONFIG statement to provide SMF logging for TCP/IP and the GLOBALCONFIG statement to activate zERT in-memory monitoring.

Using SMFCONFIG to turn on SMF logging allows you to request that standard subtypes are assigned to the TCP/IP SMF records. The SMFPARMS statement provides a similar capability but requires the installation to select the subtype numbers to be used. Use the SMFCONFIG statement instead of SMFPARMS. See more information about accounting for SMF records in z/OS Communications Server: IP Configuration Guide. You turn the zERT discovery function on in the TCP/IP stack by specifying the GLOBALCONFIG ZERT parameter in the TCP/IP profile data set. Enabling zERT causes the TCP/IP stack to record and collect cryptographic protection information for new TCP and Enterprise Extender connections that terminate at the stack. For more information on enabling zERT, see GLOBALCONFIG statement in z/OS Communications Server: IP Configuration Reference.

The SMFCONFIG profile statement controls only whether SMF records are written to the MVS™ SMF data sets. More information about the SMFCONFIG statement is contained in this link https://www.ibm.com/support/knowledgecenter/en/SSLTBW_2.3.0/com.ibm.zos.v2r3.halz001/smfconfigstatement.htm

Syntax

Tip: Specify the parameters for these statements in any order.

SMFCONFIG TYPE119 ZERTSUMmary | NOZERTSUMmary

Parameters

ZERTSUMMARY | NOZERTSUMMARY

NOZERTSUMMARY

Requests that SMF type 119 records of subtype 12 not be created. This operand is valid if the current record type setting is TYPE119. This is the default value.

ZERTSUMMARY

Requests that SMF type 119 records of subtype 12 be created for zERT aggregation function processing. These records are created periodically based on the SMF interval value in effect. SMF event records of subtype 12 are also created when zERT aggregation is enabled, or disabled dynamically. This operand is valid if the current record type setting is TYPE119.

Rule: zERT aggregation SMF 119 records are only created when the z/OS Encryption Readiness Technology aggregation function has been activated by specifying the ZERT AGGREGATION parameter on the GLOBALCONFIG profile statement.

GLOBALCONFig ZERT NOAGGregation | AGGregation

Parameters

- ZERT | NOZERT

- Specifies whether the z/OS Encryption Readiness Technology (zERT) will monitor TCP and Enterprise Extender traffic on this TCP/IP stack.

- NOZERT

- Indicates that the TCP and Enterprise Extender traffic will not be monitored by zERT. This is the default value.

- ZERT

- Indicates that TCP and Enterprise Extender traffic will be monitored by zERT. The zERT discovery function, which monitors and collects information about security sessions on a per-TCP- and per-EE connection basis, is always enabled when ZERT is specified. In addition, you can specify subparameters to enable other zERT functions.

- AGGregation

- Indicates that the zERT aggregation function is enabled. zERT aggregation uses the information collected by zERT discovery to create summarized security session information. The zERT aggregation information is reported at fixed intervals of time, as defined by the configured SMF interval value. For more details on the zERT aggregation function, see What does zERT aggregation collect? in z/OS Communications Server: IP Configuration Guide.

- NOAGGregation

- Indicates that the zERT aggregation function is disabled. This is the default.

Note that additional configuration is required to specify where zERT data is to be written. For more information about those configuration parameters, see SMFCONFIG statement and NETMONITOR statement. Separate SMFCONFIG controls exist for zERT discovery records and for zERT aggregation records.

Examples

For example, if the following is specified:

SMFCONFIG TYPE119 ZERTSUMmary

SMF type 119 subtype 12 (zERT summary) records will be recorded.

GLOBALCONFIG ZERT AGGREGATION

Both the zERT discovery and aggregation functions will begin for TCP and Enterprise Extender connections.
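
To confirm the settings after the profile has been processed, the Netstat CONFIG report can be displayed from the z/OS console. This is a minimal sketch that follows this document's convention of omitting the TCP/IP procedure name; add your stack's procedure name between the commas if more than one stack is active. The report should show the zERT settings in its global configuration section and the type 119 record settings in its SMF parameters section.

D TCPIP,,NETSTAT,CONFIG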

Appendix N: Configuring FTPS client for AT-TLS

This appendix outlines the requirements for implementing FTPS on z/OS so that the FTP transmission can use the most secure ciphers currently supported in this environment.

Secure File Transfer Options with FTPS

Currently on z/OS, FTP can be customized to create a secure connection (via TLS) in two ways. The TLSMECHANISM statement in FTP.DATA determines how TLS is implemented.

TLSMECHANISM FTP The secure mechanism is defined by FTP

TLSMECHANISM ATTLS The secure mechanism is defined by AT-TLS

When the secure mechanism is FTP, the connection is currently restricted to TLS 1.2 and to a set of ciphers that are no longer considered sufficiently secure.

For that reason, we recommend that the secure mechanism ATTLS be used.

See z/OS Communications Server Version 2 Release 4 New Function Summary for more information.

Steps for customizing the FTP client for AT-TLS

The following steps outline what is needed to customize FTP to use AT-TLS.

- Set up the Policy Agent (PAGENT)

- The Policy Agent contains information for the FTP client, such as the available ciphers, the SSL/TLS level used, and the certificate.

- Change TCPIP to use TTLS

- Make RACF changes to control TCPIP usage before the Policy Agent is available

- Activate the TTLS setting in TCPIP

- Modify and test the FTP JCL to IntelliMagic

Set up the Policy Agent (PAGENT)

If the Policy Agent (PAGENT) is not yet being used, it will need to be fully configured.

Perform the following steps:

- Configure the policy agent as a started task in z/OS.

- Create a PAGENT started task procedure in the appropriate PROCLIB data set.

- A sample PAGENT started task procedure is available in TCPIP.SEZAINST(EZAPAGSP).

//PAGENT PROC

//PAGENT EXEC PGM=PAGENT,REGION=0K,TIME=NOLIMIT,

// PARM='ENVAR("_CEE_ENVFILE_S=DD:STDENV")/'

//*

//* Sample MVS data set containing environment variables:

//*

//STDENV DD DISP=SHR,DSN=USER.TCPPARMS(PAENV)

//SYSPRINT DD SYSOUT=*

//SYSOUT DD SYSOUT=*

//*

//CEEDUMP DD SYSOUT=*,DCB=(RECFM=FB,LRECL=132,BLKSIZE=132)

- Modify the STDENV DD statement to point to a member that will contain the environment variables

- In the example STDENV member (PAENV), modify the environment variable PAGENT_CONFIG_FILE to point to the configuration member.

TZ=PST8PDT7

PAGENT_CONFIG_FILE=//'USER.TCPPARMS(SPACFG)'

PAGENT_LOG_FILE=SYSLOGD

- In the configuration member (SPACFG), point to the AT-TLS Policy member

TCPIMAGE TCPIP FLUSH PURGE

TTLSConfig //'USER.TCPPARMS(TCPPOLS)' FLUSH PURGE

- Define the security authorization for the policy agent.

- Make RACF changes to define the PAGENT Started Task

//*

//* Define PAGENT to RACF

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RDEFINE STARTED PAGENT.*

SETROPTS RACLIST(STARTED) REFRESH

/*

- Add PAGENT user to RACF

//*

//* Add PAGENT user to RACF

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

ADDUSER PAGENT DFLTGRP(OMVSGRP) OMVS(UID(0) SHARED HOME('/'))

/*

- Associate the PAGENT User ID to the PAGENT Started Task

//*

//* Add User PAGENT to STARTED

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RALTER STARTED PAGENT.* STDATA(USER(PAGENT))

SETROPTS RACLIST(STARTED) REFRESH

/*

- Restrict management of the PAGENT Started Task

//*

//* Restrict access to the PAGENT STC

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RDEFINE OPERCMDS (MVS.SERVMGR.PAGENT) UACC(NONE)

PERMIT MVS.SERVMGR.PAGENT CLASS(OPERCMDS) ACCESS(CONTROL) -

ID(PAGENT)

SETROPTS RACLIST(OPERCMDS) REFRESH

/*

- Define the policy agent configuration files and AT-TLS rules.

- Using our previous Policy Agent example, the configuration is placed in member TCPPOLS in data set USER.TCPPARMS.

- See the sample policy agent configuration file later in this appendix.

- Create and configure digital certificate in RACF.

- See Appendix F in IntelliMagic Vision for z/OS Data Collection Instructions for detailed JCL to create and configure the required certificate.

- Note: Only the GoDaddy Root Certificate is required to connect to the IntelliMagic secure FTP server.
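
Once the started task, RACF definitions and configuration members are in place, the Policy Agent can be started and checked from the z/OS console. The commands below are a minimal sketch, assuming the procedure is named PAGENT as in the examples above. The first command starts the Policy Agent; the second asks a running Policy Agent to reread its configuration after a policy change.

S PAGENT

F PAGENT,REFRESH

The installed AT-TLS policy can then be listed from a z/OS UNIX shell with the pasearch command, for example:

pasearch -t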

Change TCPIP to use TTLS

Add TTLS to the TCPCONFIG statement in the TCPIP Profile configuration member.

For example:

TCPCONFIG TCPSENDBFRSIZE 32K TCPRCVBUFRSIZE 32K SENDGARBAGE FALSE

RESTRICTLOWPORTS TTLS

Make RACF changes to control TCPIP usage before the Policy Agent is available

Allow users to access the TCPIP stack before the Policy Agent is available.

Note: This example allows all users access to the TCPIP stack. Make appropriate changes if you only want to give certain users access.

//*

//* Allow users access to TCPIP stack before PAGENT is started

//*

//TSO EXEC PGM=IKJEFT1A

//SYSTSPRT DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//SYSTSIN DD *

RDEFINE SERVAUTH (EZB.INITSTACK.*) UACC(READ)

PERMIT EZB.INITSTACK.* CLASS(SERVAUTH) ACCESS(CONTROL) -

ID(PAGENT)

SETROPTS RACLIST(SERVAUTH) REFRESH

/*
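
If only selected users should reach the stack before the Policy Agent has installed its policy, the same profile can instead be defined with UACC(NONE) and individual permits. This is a sketch only: TASKUSR is a hypothetical user ID standing in for any other task that must use the stack during that window, and READ access is assumed to be sufficient for your installation. These commands would take the place of the RDEFINE and PERMIT statements in the SYSTSIN input of the job above.

RDEFINE SERVAUTH (EZB.INITSTACK.*) UACC(NONE)

PERMIT EZB.INITSTACK.* CLASS(SERVAUTH) ACCESS(READ) ID(PAGENT)

PERMIT EZB.INITSTACK.* CLASS(SERVAUTH) ACCESS(READ) ID(TASKUSR)

SETROPTS RACLIST(SERVAUTH) REFRESH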

Activate TTLS setting in TCPIP

In order to activate the TTLS setting, either recycle the TCPIP address space (note: TCPIP connections will be disrupted), or modify the TCPIP address space by using an Obeyfile entry to add the TTLS support.

Obeyfile example:

In USER.TCPPARMS(TTLSON)

TCPCONFIG TTLS

Execute the following z/OS console command:

Vary TCPIP,,O,DSN=USER.TCPPARMS(TTLSON)
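
After the stack has been recycled or the obeyfile has been processed, the setting can be checked from the z/OS console. This is a minimal sketch, again omitting the TCP/IP procedure name as the command above does. The Netstat CONFIG report should show TTLS as enabled in its TCP configuration section, and the Netstat TTLS report displays AT-TLS information for current connections.

D TCPIP,,NETSTAT,CONFIG

D TCPIP,,NETSTAT,TTLS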

Modify and test the FTP JCL

Sample FTP JCL using AT-TLS.

Note: Update the FTP site address and the <User_ID> and <Password> statements.

//*

//* TEST FTP AT-TLS ACCESS

//*

//TEMPFTPD EXEC PGM=IEBGENER

//SYSUT1 DD *

DEBUG SEC

SECURE_MECHANISM TLS

TLSRFCLEVEL CCCNONOTIFY

TLSMECHANISM ATTLS

SECURE_FTP REQUIRED

SECURE_CTRLCONN PRIVATE

SECURE_DATACONN PRIVATE ; Payload must be encrypted.

EPSV4 TRUE

/*

//*

//SYSUT2 DD DISP=(NEW,PASS),DSN=&FTPDATA,UNIT=3390,

// SPACE=(CYL,(1,1)),RECFM=FB,LRECL=80,BLKSIZE=0

//SYSPRINT DD SYSOUT=*

//SYSIN DD DUMMY

//*

//* FTP DATA

//*

//FTP EXEC PGM=FTP,

// PARM=('POSIX(ON) ALL31(ON)',

// '/(TIMEOUT 60 EXIT=08')

//SYSUDUMP DD SYSOUT=*

//SYSPRINT DD SYSOUT=*

//OUTPUT DD SYSOUT=*

//SYSFTPD DD DISP=(OLD,DELETE),DSN=&FTPDATA

//INPUT DD *

ftp-us.intellimagic.com

<User_ID>

<Password>

ls

cwd upload

ls

QUIT

Sample policy agent configuration file (note: some parameters are only valid for z/OS 2.4 or higher).

# Common FTP Group that all Rules use

TTLSGroupAction grp_FTP

{

TTLSEnabled On

Trace 2 # Log Errors to syslogd

}

# Common Environment that most servers could use

TTLSEnvironmentAction Generic_Server_Env

{

HandshakeRole Server

TTLSKeyRingParms

{

Keyring Server_Ring

}

}

###################################################################

# #

# FTP Specific Rules and Actions #

# #

###################################################################

# FTP data connections must use SecondaryMap

# to access keyring and certificate under server’s security context.

# Do not define separate rules for FTP data connections.

TTLSRule Secure_Ftp_client

{

RemotePortRange 21

Direction Outbound

TTLSGroupActionRef grp_FTP

TTLSEnvironmentActionRef Secure_Ftp_Client_Env

}

TTLSRule Secure_Ftpd

{

LocalPortRange 21

Direction Inbound

TTLSGroupActionRef grp_FTP

TTLSEnvironmentActionRef Secure_Ftpd_Env

}

# Environment Shared by all secure FTP client connections.

# each client must own their own key ring named CLIENT/RING

TTLSEnvironmentAction Secure_Ftp_Client_Env

{

HandshakeRole Client

TTLSKeyRingParms

{

Keyring CLIENT/IMCERT

}

TTLSCipherParmsRef RequireEncryption

TTLSEnvironmentAdvancedParms

{

ApplicationControlled On

SecondaryMap On

SSLV2 On # Allow SSLv2 connections

SSLV3 On # Allow SSLv3 connections

TLSV1.2 On # Allow TLSv1.2 connections

TLSV1.3 On # Allow TLSv1.3 connections

}

}

# Environment Shared by all secure FTP server connections.

TTLSEnvironmentAction Secure_Ftpd_Env

{

HandshakeRole Server

TTLSKeyRingParms

{

Keyring FTPDsafkeyring # The keyring must be owned by the FTP server

}

TTLSEnvironmentAdvancedParms

{

ApplicationControlled On

SecondaryMap On # include data connections

SSLV2 On # Allow SSLv2 connections (Default is Off)

SSLV3 On # Allow SSLv3 connections (Default is On )

TLSV1 On # Allow TLSv1 connections (Default is On )

}

}

###################################################################

# #

# Network Security Services #

# #

###################################################################

# Rule for Network Security Server.

TTLSRule NS_Server_App

{

LocalPortRange 4159

Direction Inbound

TTLSGroupActionRef grp_FTP

TTLSEnvironmentActionRef NS_Server_Env

}

TTLSEnvironmentAction NS_Server_Env

{

HandshakeRole Server

TTLSKeyRingParms

{

Keyring Server_Ring

}

TTLSCipherParmsRef RequireEncryption

}

# Rule for Network Security clients.

# Each client must own their own key ring named Client_Ring.

TTLSRule NS_Client_App

{

RemotePortRange 4159 # Server port

Direction Outbound

TTLSGroupActionRef grp_FTP

TTLSEnvironmentActionRef NS_Client_Env

}

TTLSEnvironmentAction NS_Client_Env

{

HandshakeRole Client

TTLSKeyRingParms

{

Keyring Client_Ring

}

TTLSCipherParmsRef RequireEncryption

}

# Set of TLS Ciphers with Encryption

TTLSCipherParms RequireEncryption

{

V3CipherSuites TLS_AES_128_GCM_SHA256

V3CipherSuites TLS_AES_256_GCM_SHA384

V3CipherSuites TLS_CHACHA20_POLY1305_SHA256

V3CipherSuites TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

V3CipherSuites TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

V3CipherSuites TLS_DHE_RSA_WITH_3DES_EDE_CBC_SHA

V3CipherSuites TLS_DHE_DSS_WITH_3DES_EDE_CBC_SHA

V3CipherSuites TLS_DH_RSA_WITH_3DES_EDE_CBC_SHA

V3CipherSuites TLS_DH_DSS_WITH_3DES_EDE_CBC_SHA

V3CipherSuites TLS_RSA_WITH_3DES_EDE_CBC_SHA

V3CipherSuites TLS_DHE_RSA_WITH_AES_256_CBC_SHA

V3CipherSuites TLS_DHE_DSS_WITH_AES_256_CBC_SHA

V3CipherSuites TLS_DH_RSA_WITH_AES_256_CBC_SHA

V3CipherSuites TLS_DH_DSS_WITH_AES_256_CBC_SHA

V3CipherSuites TLS_RSA_WITH_AES_256_CBC_SHA

V3CipherSuites TLS_DHE_RSA_WITH_AES_128_CBC_SHA