This blog was originally published on January 23, 2017.

Compressing data in an IBM Spectrum Virtualize environment can be a good way to regain capacity, but it can cause hidden performance problems if the workloads suited to compression are not identified first. Visualizing these workloads is key to determining when and where compression can be used successfully. In this blog, we help you identify the right workloads so that you can achieve capacity savings in your IBM Spectrum Virtualize environments without compromising performance.

Today, most storage vendors build compression capabilities into their hardware. The advantage of compression is that you need less real capacity to meet the needs of your users. Compression reduces your managed capacity, directly reducing your storage costs.

For IBM Spectrum Virtualize environments, IBM provides a utility called Comprestimator that identifies highly compressible data by scanning byte patterns and looking for repetition. It is an excellent tool for identifying data that compresses well. Currently, it must be run on the hosts connected to the IBM Spectrum Virtualize storage, but IBM is working on integrating this utility into IBM Spectrum Virtualize.
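
This post does not describe Comprestimator's internals, so the following is only an illustration of the general idea of estimating compressibility without compressing an entire volume. It is a minimal Python sketch that compresses a handful of evenly spaced, fixed-size sample blocks, assuming the standard-library `zlib` compressor as a stand-in (it is not the compression algorithm IBM Spectrum Virtualize uses). The function name `estimate_compression_ratio` and its `block_size` and `samples` parameters are hypothetical.

```python
import math
import os
import zlib


def estimate_compression_ratio(data: bytes, block_size: int = 32 * 1024, samples: int = 16) -> float:
    """Estimate compressibility from evenly spaced sample blocks.

    Returns compressed bytes / original bytes over the sampled blocks, so a
    lower value means more compressible data. zlib is only a stand-in for the
    compressor a storage system would actually use (an assumption).
    """
    if not data:
        raise ValueError("no data to sample")
    n_blocks = max(1, len(data) // block_size)
    # Choose a stride so that at most `samples` blocks are read, spread over the input.
    step = max(1, math.ceil(n_blocks / samples))
    original = compressed = 0
    for i in range(0, n_blocks, step):
        block = data[i * block_size:(i + 1) * block_size]
        original += len(block)
        compressed += len(zlib.compress(block))
    return compressed / original


repetitive = b"customer_id,order_date,amount\n" * 20000  # text-like, highly repetitive
random_like = os.urandom(256 * 1024)                     # behaves like already-compressed data
print(f"repetitive:  {estimate_compression_ratio(repetitive):.2f}")
print(f"random-like: {estimate_compression_ratio(random_like):.2f}")
```

Repetitive, text-like data should yield a ratio far below 1.0, while random bytes, used here to stand in for already-compressed content such as images, should stay close to 1.0. Sampling trades accuracy for speed, which matters when volumes are large.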

Certain data types, such as images, are already compressed and do not compress further, making them poor candidates. However, many other data types, such as general database files, compress extremely well. By running the IBM Comprestimator tool in your environment, you can quickly identify compressible data and see an estimate of how much space you will save. In our experience, average savings are over 50%. While the compressibility of the data is important, it is not the only factor to consider, because the Comprestimator tool knows nothing about the access patterns of your workloads. These access patterns directly affect the performance you can expect when accessing compressed data.
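
Turning per-volume estimates into an overall savings figure is simple arithmetic: the fraction saved is 1 minus the capacity-weighted ratio of compressed size to original size. The minimal Python sketch below assumes each volume is described by its used capacity in GiB and an estimated ratio (compressed size divided by original size, so 1.0 means incompressible). The function `capacity_savings`, the volume names, and the numbers are hypothetical inputs for illustration, not measured results.

```python
from typing import Dict, Tuple


def capacity_savings(volumes: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Return (GiB saved, fraction saved) across a set of volumes.

    Each value is (used_capacity_gib, estimated_ratio), where estimated_ratio
    is compressed size / original size.
    """
    original = sum(cap for cap, _ in volumes.values())
    compressed = sum(cap * ratio for cap, ratio in volumes.values())
    saved = original - compressed
    return saved, (saved / original if original else 0.0)


# Hypothetical inputs: a database volume estimated to shrink to 40% of its size
# and an image volume that is already compressed (ratio 1.0).
example = {"db_vol": (500.0, 0.4), "image_vol": (300.0, 1.0)}
saved_gib, saved_frac = capacity_savings(example)
print(f"saved {saved_gib:.0f} GiB ({saved_frac:.1%})")  # saved 300 GiB (37.5%)
```

The database volume alone would save 60% of its capacity, but including the already-compressed image volume dilutes the overall figure to 37.5%, which is why such data makes a poor candidate.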

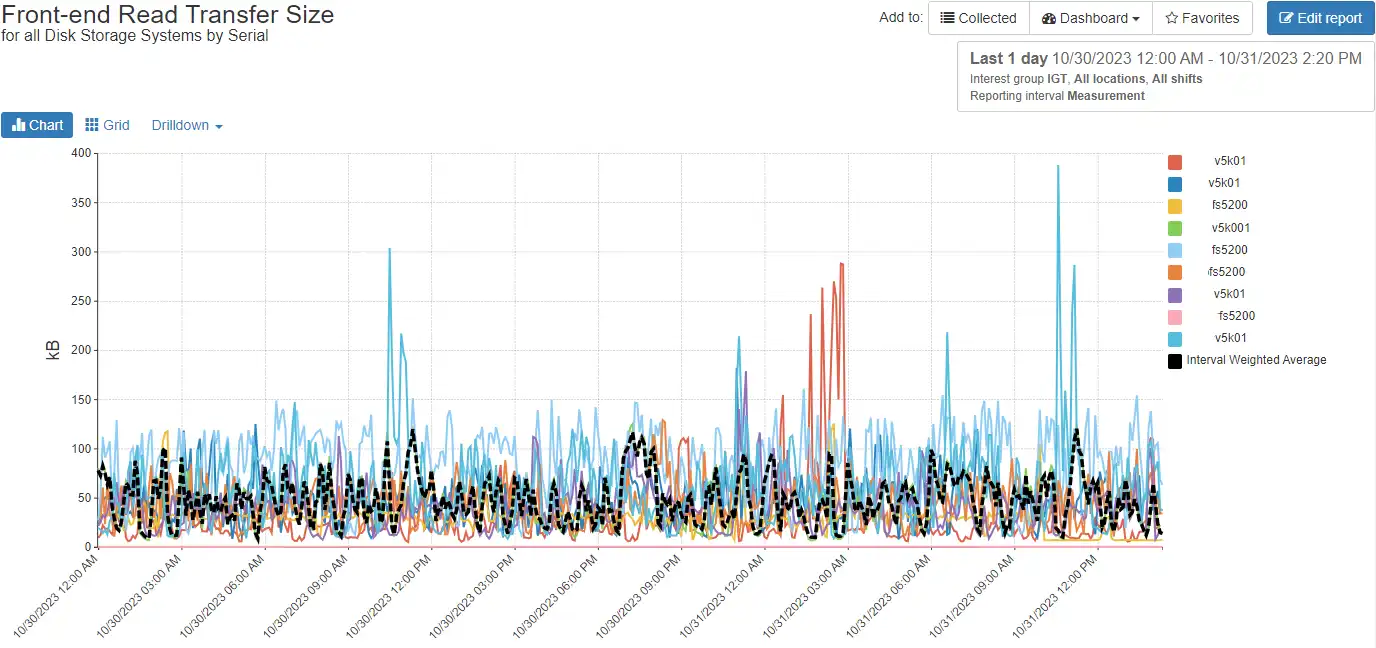

Large transfers can lead to significant I/O latency when accessing compressed data. Response time does not increase linearly with transfer size; at this time, we cannot explain why, but it is likely due to queuing for processor resources. In our experience, this non-linear latency behavior begins at around 32 KB per transfer. We believe this is a good rule of thumb for now, although it may change over time. Because many workloads include applications that perform large transfers, we suggest carefully studying the I/O access patterns of your applications before implementing compression on a global scale. IntelliMagic Vision software is ideally suited to visualize and drill into your various workloads for this purpose.
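
One way to begin studying access patterns is to derive the average transfer size for each measurement interval from two commonly reported metrics, throughput and I/O rate, and then check how often it exceeds the 32 KB rule of thumb. This is a minimal Python sketch under those assumptions; the function names and sample intervals are hypothetical, and it does not represent how IntelliMagic Vision computes its reports.

```python
from typing import List, Tuple

# Rule of thumb from this post: non-linear latency on compressed volumes
# was observed starting at around 32 KB per transfer.
LARGE_TRANSFER_KB = 32.0


def average_transfer_kb(throughput_kb_per_sec: float, io_per_sec: float) -> float:
    """Average transfer size for one interval: data rate divided by I/O rate."""
    return throughput_kb_per_sec / io_per_sec if io_per_sec > 0 else 0.0


def share_of_large_transfer_intervals(intervals: List[Tuple[float, float]]) -> float:
    """Fraction of (KB/s, I/O per s) intervals whose average transfer exceeds the threshold."""
    if not intervals:
        return 0.0
    large = sum(1 for kbps, iops in intervals if average_transfer_kb(kbps, iops) > LARGE_TRANSFER_KB)
    return large / len(intervals)


# Hypothetical measurement intervals for one volume: (throughput KB/s, I/O per second).
samples = [(16000.0, 2000.0), (96000.0, 2000.0), (40000.0, 5000.0), (128000.0, 1000.0)]
print(share_of_large_transfer_intervals(samples))  # 0.5
```

Looking at the share of intervals, rather than one overall average, helps reveal workloads that are mostly small-block but have periods of large transfers, such as batch or backup windows.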

The following image, taken from IntelliMagic Vision, shows the average transfer size for a workload running in an IBM Spectrum Virtualize environment. These volumes are not compressed.

Our analysis of this workload using IntelliMagic Vision showed that compressing the volumes supporting it would have resulted in I/O latencies much higher than those of the existing non-compressed volumes. Thus, capacity savings alone are not a sufficient basis for deciding to use compression.

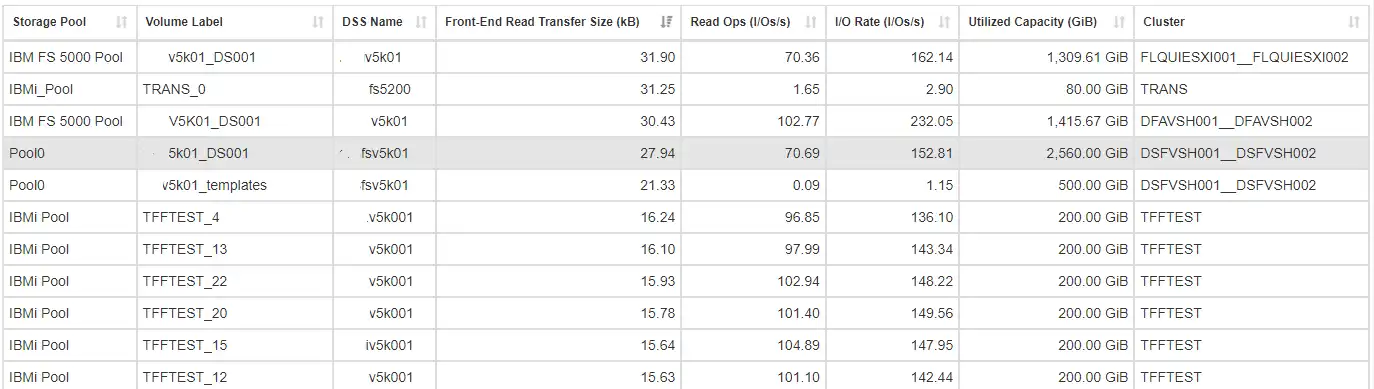

The following report, created using IntelliMagic Vision, shows the workloads that would be good candidates for compression based on their transfer size and I/O activity:

If the average transfer size is less than 32 KB or the I/O activity is insignificant, the workload is a good candidate for compression.
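
The selection rule can be expressed as a simple filter. This is a minimal Python sketch, assuming you already have per-workload averages for transfer size and I/O rate. The `WorkloadStats` type, the workload names and values, and especially the `MIN_SIGNIFICANT_IOPS` cut-off for "insignificant" activity are hypothetical and should be chosen for your own environment.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WorkloadStats:
    name: str
    avg_transfer_kb: float  # average KB per I/O over the measurement period
    avg_io_per_sec: float   # average I/O rate over the measurement period


TRANSFER_THRESHOLD_KB = 32.0  # rule of thumb from this post
MIN_SIGNIFICANT_IOPS = 50.0   # hypothetical cut-off for "insignificant" activity


def is_compression_candidate(w: WorkloadStats) -> bool:
    # Small average transfers, or so little I/O that added latency hardly matters.
    return w.avg_transfer_kb < TRANSFER_THRESHOLD_KB or w.avg_io_per_sec < MIN_SIGNIFICANT_IOPS


def compression_candidates(workloads: List[WorkloadStats]) -> List[str]:
    return [w.name for w in workloads if is_compression_candidate(w)]


workloads = [
    WorkloadStats("oltp_db", avg_transfer_kb=8.0, avg_io_per_sec=4000.0),
    WorkloadStats("backup_staging", avg_transfer_kb=256.0, avg_io_per_sec=900.0),
    WorkloadStats("archive", avg_transfer_kb=128.0, avg_io_per_sec=2.0),
]
print(compression_candidates(workloads))  # ['oltp_db', 'archive']
```

Using `or` mirrors the rule as stated: a workload with large transfers can still qualify when it does so little I/O that the extra latency is unlikely to matter.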

In conclusion, it is critical to consider the access patterns of your workloads before enabling compression in your IBM Spectrum Virtualize environments. Otherwise, you may be trading a capacity issue for a performance problem. For a no-obligation evaluation that identifies which of your workloads are good candidates for compression, contact us.

This article's author

Brett Allison
